I. Check the local system environment

1. Check the system version

[root@Server001 ~]# cat /etc/os-release

NAME="CentOS Linux"

VERSION="7 (Core)"

ID="centos"

ID_LIKE="rhel fedora"

VERSION_ID="7"

PRETTY_NAME="CentOS Linux 7 (Core)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:centos:centos:7"

HOME_URL="https://www.centos.org/"

BUG_REPORT_URL="https://bugs.centos.org/"

CENTOS_MANTISBT_PROJECT="CentOS-7"

CENTOS_MANTISBT_PROJECT_VERSION="7"

REDHAT_SUPPORT_PRODUCT="centos"

REDHAT_SUPPORT_PRODUCT_VERSION="7"

2. List the server's network interfaces

[root@Server001 network-scripts]# ifconfig -a

bond0: flags=5123<UP,BROADCAST,MASTER,MULTICAST> mtu 1500

inet 192.168.30.122 netmask 255.255.255.0 broadcast 192.168.30.255

ether a6:ad:e5:84:f0:6e txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.3.55 netmask 255.255.255.0 broadcast 192.168.3.255

inet6 fe80::2a6e:d4ff:fe89:8720 prefixlen 64 scopeid 0x20<link>

ether 28:6e:d4:89:87:20 txqueuelen 1000 (Ethernet)

RX packets 2256 bytes 439140 (428.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 428 bytes 68770 (67.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::2a6e:d4ff:fe8a:3299 prefixlen 64 scopeid 0x20<link>

ether 28:6e:d4:8a:32:99 txqueuelen 1000 (Ethernet)

RX packets 1617 bytes 386452 (377.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7 bytes 586 (586.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::2a6e:d4ff:fe88:f490 prefixlen 64 scopeid 0x20<link>

ether 28:6e:d4:88:f4:90 txqueuelen 1000 (Ethernet)

RX packets 1617 bytes 386452 (377.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7 bytes 586 (586.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
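The same inventory can be read directly from sysfs, which is handy on hosts where `ifconfig` (from net-tools) is not installed. The following is a minimal sketch; `list_nics` is a hypothetical helper name, and the optional directory argument exists only so the function can be pointed at a test directory instead of `/sys/class/net`.

```shell
#!/bin/sh
# Print "name state mac" for every network interface found in sysfs.
# list_nics is a hypothetical helper; the directory argument is an
# assumption added for testing and defaults to /sys/class/net.
list_nics() {
    net_dir="${1:-/sys/class/net}"
    for dev in "$net_dir"/*; do
        # Skip entries that are not interfaces (e.g. the bonding_masters file).
        [ -e "$dev/address" ] || continue
        name=$(basename "$dev")
        state=$(cat "$dev/operstate" 2>/dev/null || echo unknown)
        mac=$(cat "$dev/address")
        printf '%s %s %s\n' "$name" "$state" "$mac"
    done
}

list_nics
```

Recording the MAC address of each interface before bonding is useful, because the slaves take on the bond's MAC address once the bond is up.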

II. Create the interface configuration files

1. Change to the interface configuration directory

[root@Server001 ~]# cd /etc/sysconfig/network-scripts/

[root@Server001 network-scripts]# ls

ifcfg-bond0 ifdown-eth ifdown-ppp ifdown-tunnel ifup-ippp ifup-post ifup-TeamPort network-functions-ipv6

ifcfg-eth0 ifdown-ippp ifdown-routes ifup ifup-ipv6 ifup-ppp ifup-tunnel

ifcfg-lo ifdown-ipv6 ifdown-sit ifup-aliases ifup-isdn ifup-routes ifup-wireless

ifdown ifdown-isdn ifdown-Team ifup-bnep ifup-plip ifup-sit init.ipv6-global

ifdown-bnep ifdown-post ifdown-TeamPort ifup-eth ifup-plusb ifup-Team network-functions

2. Copy the eth0 configuration file

[root@Server001 network-scripts]# cp ifcfg-eth0 ifcfg-eth1

[root@Server001 network-scripts]# cp ifcfg-eth0 ifcfg-eth2

[root@Server001 network-scripts]# cp ifcfg-eth0 ifcfg-bond0

3. Edit the bond0 configuration file

[root@Server001 network-scripts]# cat ifcfg-bond0

DEVICE=bond0

BOOTPROTO=none

TYPE=Bond

ONBOOT=yes

IPADDR=192.168.30.122

NETMASK=255.255.255.0
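On RHEL/CentOS 7 the network scripts also accept a `BONDING_OPTS` variable in the bond's own ifcfg file, which keeps the bonding parameters next to the interface they apply to. The fragment below is a sketch of that variant, not the author's configuration; the option values are simply the ones this guide sets later in bonding.conf.

```shell
# Assumed addition to /etc/sysconfig/network-scripts/ifcfg-bond0
# (not part of the file shown above): per-bond options instead of
# module-wide options in /etc/modprobe.d/bonding.conf.
BONDING_OPTS="mode=1 miimon=100"
```

If this variant is used, the `options bonding ...` line in bonding.conf should not set conflicting values for the same bond.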

4. Edit the eth1 configuration file

[root@Server001 network-scripts]# cat ifcfg-eth1

DEVICE=eth1

BOOTPROTO=none

TYPE=Ethernet

MASTER=bond0

SLAVE=yes

5. Edit the eth2 configuration file

[root@Server001 network-scripts]# cat ifcfg-eth2

DEVICE=eth2

BOOTPROTO=none

TYPE=Ethernet

MASTER=bond0

SLAVE=yes
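Because the two slave files differ only in the device name, they can be generated rather than edited by hand. This is a minimal sketch; `write_slave_cfg` is a hypothetical helper, and the example writes into a scratch directory so the result can be reviewed before anything under /etc/sysconfig/network-scripts is touched.

```shell
#!/bin/sh
# Write a minimal slave ifcfg file for one interface.
# write_slave_cfg is a hypothetical helper.
# Arguments: interface name, bond name, target directory (optional).
write_slave_cfg() {
    iface="$1"
    master="$2"
    dir="${3:-/etc/sysconfig/network-scripts}"
    cat > "$dir/ifcfg-$iface" <<EOF
DEVICE=$iface
BOOTPROTO=none
TYPE=Ethernet
MASTER=$master
SLAVE=yes
EOF
}

# Example: generate both slave files in a scratch directory and show one.
out_dir=$(mktemp -d)
for nic in eth1 eth2; do
    write_slave_cfg "$nic" bond0 "$out_dir"
done
cat "$out_dir/ifcfg-eth1"
```

The generated files contain the same five keys as the ifcfg-eth1 and ifcfg-eth2 listings above.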

III. Create the bonding configuration file

1. Edit bonding.conf

[root@node network-scripts]# vim /etc/modprobe.d/bonding.conf

[root@node network-scripts]# cat /etc/modprobe.d/bonding.conf

alias bond0 bonding

options bonding mode=1 miimon=100

Note on the mode values:

mode=0 // round-robin load balancing (balance-rr)

mode=1 // active-backup

mode=3 // broadcast

mode=4 // link aggregation (802.3ad)
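The bonding driver defines seven modes, numbered 0 to 6, each with a symbolic name. The lookup below is a small sketch that maps the number used in `options bonding mode=N` to that name; `bond_mode_name` is a hypothetical helper, not a system command.

```shell
#!/bin/sh
# Map a bonding mode number to the driver's symbolic mode name.
# bond_mode_name is a hypothetical helper.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# The configuration in this guide uses mode=1.
bond_mode_name 1
```

Once the bond is up, the active mode can also be read from /sys/class/net/bond0/bonding/mode, which reports both the name and the number.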

2. Stop and disable the NetworkManager service

systemctl stop NetworkManager

systemctl disable NetworkManager

3. Load the bonding module

[root@Server001 network-scripts]# lsmod |grep bonding

[root@Server001 network-scripts]# modprobe bonding

[root@Server001 network-scripts]# lsmod |grep bonding

bonding 152656 0

4. Restart the network service

systemctl restart network

IV. Verify the bond

1. Check the local interfaces again

[root@Server001 network-scripts]# ifconfig

bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST> mtu 1500

inet 192.168.30.122 netmask 255.255.255.0 broadcast 192.168.30.255

inet6 fe80::2a6e:d4ff:fe8a:3299 prefixlen 64 scopeid 0x20<link>

ether 28:6e:d4:8a:32:99 txqueuelen 1000 (Ethernet)

RX packets 2426 bytes 748394 (730.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 838 (838.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.3.55 netmask 255.255.255.0 broadcast 192.168.3.255

inet6 fe80::2a6e:d4ff:fe89:8720 prefixlen 64 scopeid 0x20<link>

ether 28:6e:d4:89:87:20 txqueuelen 1000 (Ethernet)

RX packets 2853 bytes 740694 (723.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 478 bytes 75189 (73.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500

ether 28:6e:d4:8a:32:99 txqueuelen 1000 (Ethernet)

RX packets 2229 bytes 689858 (673.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 838 (838.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth2: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500

ether 28:6e:d4:8a:32:99 txqueuelen 1000 (Ethernet)

RX packets 2243 bytes 690766 (674.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

2. Check the bonding status

[root@Server001 network-scripts]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth1

MII Status: up

Speed: Unknown

Duplex: Unknown

Link Failure Count: 0

Permanent HW addr: 28:6e:d4:8a:32:99

Slave queue ID: 0

Slave Interface: eth2

MII Status: up

Speed: Unknown

Duplex: Unknown

Link Failure Count: 0

Permanent HW addr: 28:6e:d4:88:f4:90

Slave queue ID: 0

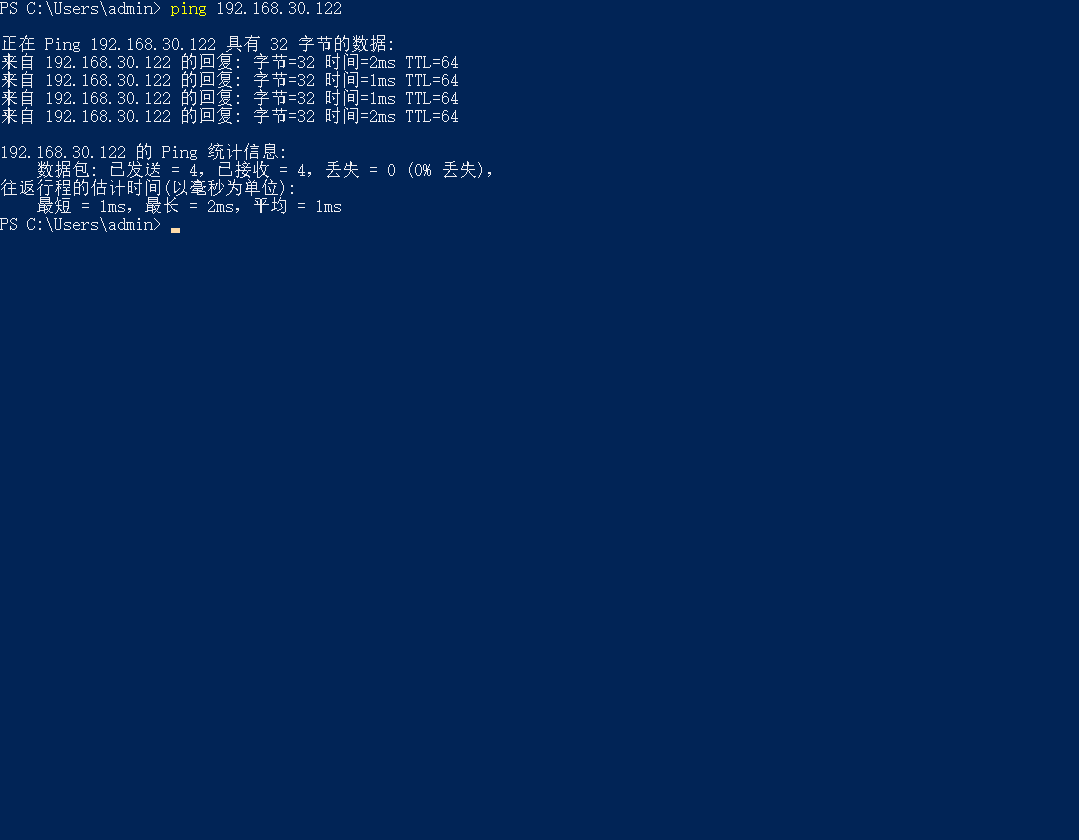

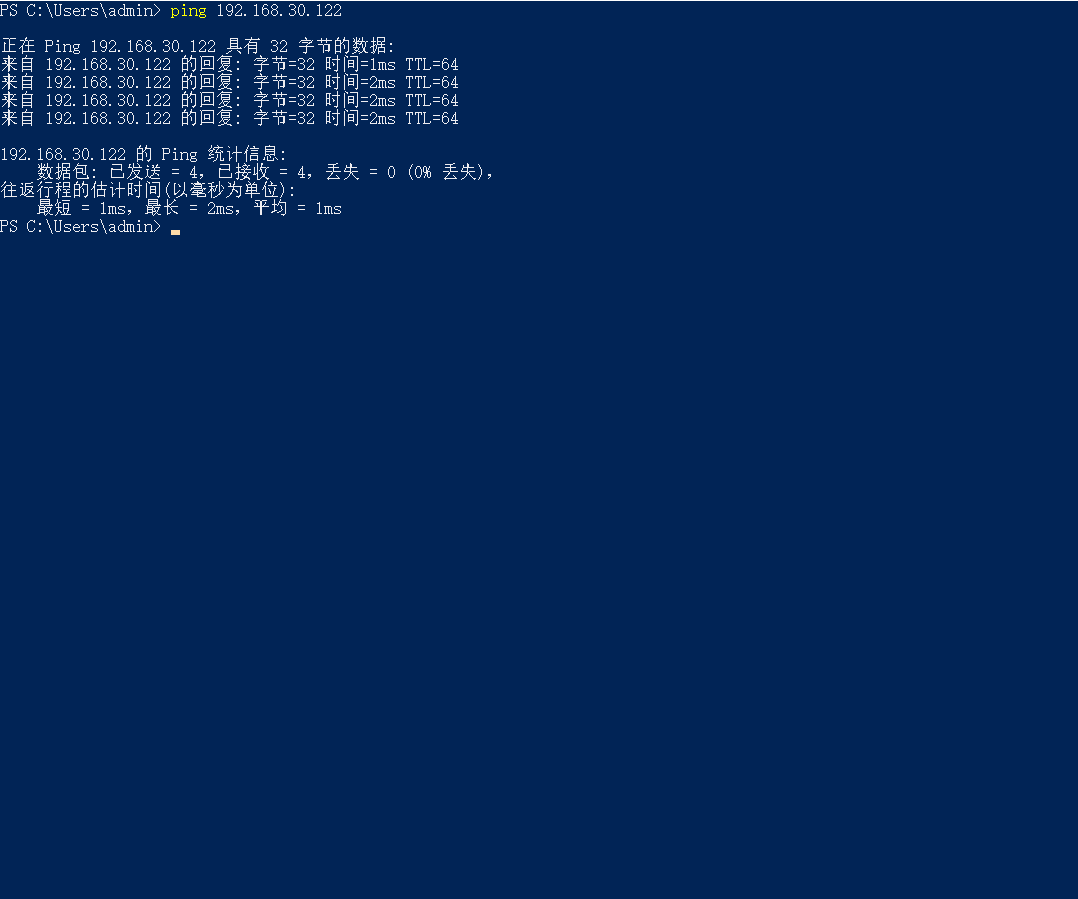
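For scripted checks, the one field that matters most in active-backup mode is "Currently Active Slave". The sketch below extracts it from the status file; `active_slave` is a hypothetical helper, and the optional file argument is an assumption added so the function can be run against a saved copy of the output.

```shell
#!/bin/sh
# Print the currently active slave of a bond.
# active_slave is a hypothetical helper; the file argument defaults to
# the status file of bond0.
active_slave() {
    status_file="${1:-/proc/net/bonding/bond0}"
    sed -n 's/^Currently Active Slave: //p' "$status_file"
}

# Only query the live file when the bond actually exists on this host.
if [ -r /proc/net/bonding/bond0 ]; then
    active_slave
fi
```

Running it before and after a failover test gives a quick way to confirm that the active interface has changed.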

V. Test connectivity

1. Ping the server from a local client

ping 192.168.30.122

VI. Bring down eth1 and test connectivity

1. Bring down eth1

[root@Server001 network-scripts]# ifdown eth1

[root@Server001 network-scripts]#

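2. Check connectivity from the local client

The ping still succeeds.

3. Check the current bond0 status

The active interface has switched to eth2, which now carries the traffic.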

[root@Server001 network-scripts]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth2

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth2

MII Status: up

Speed: Unknown

Duplex: Unknown

Link Failure Count: 0

Permanent HW addr: 28:6e:d4:88:f4:90

Slave queue ID: 0

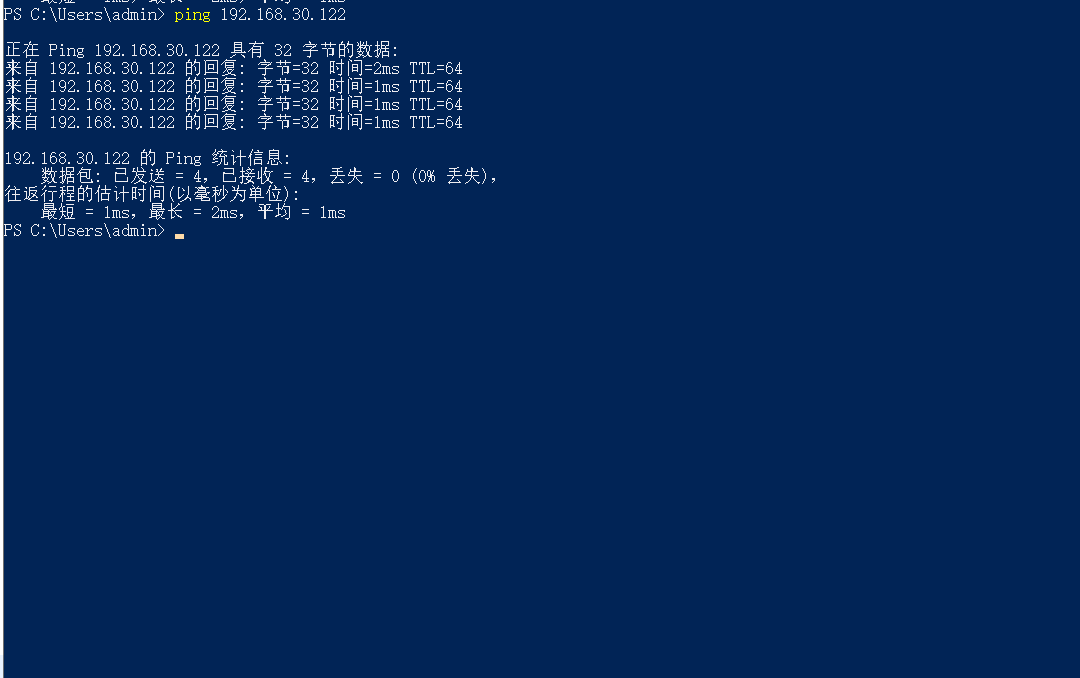
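After a failover it helps to see which slaves are still attached to the bond and what link state each reports. The following sketch prints one "interface status" pair per slave; `slave_status` is a hypothetical helper, and the optional file argument is an assumption added so it can be run on saved output.

```shell
#!/bin/sh
# Print "slave mii_status" for every slave listed in a bonding status file.
# slave_status is a hypothetical helper; the file argument defaults to
# the status file of bond0.
slave_status() {
    status_file="${1:-/proc/net/bonding/bond0}"
    awk -F': ' '
        # Remember the slave name when a slave section starts.
        /^Slave Interface:/ { slave = $2 }
        # The first MII Status line belongs to the bond itself and is
        # skipped because no slave has been seen yet.
        /^MII Status:/ && slave != "" { print slave, $2; slave = "" }
    ' "$status_file"
}

# Only query the live file when the bond actually exists on this host.
if [ -r /proc/net/bonding/bond0 ]; then
    slave_status
fi
```

In the output above, only eth2 would be listed, since eth1 was removed from the bond by `ifdown eth1`.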

VII. Bring down eth2 and test connectivity

1. Bring eth1 back up and bring down eth2

[root@Server001 network-scripts]# ifup eth1

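[root@Server001 network-scripts]# ifdown eth2

2. Test connectivity from the local client

The ping still succeeds.

3. Check the current bond0 status

The active interface has switched back to eth1, which now carries the traffic.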

[root@Server001 network-scripts]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth1

MII Status: up

Speed: Unknown

Duplex: Unknown

Link Failure Count: 0

Permanent HW addr: 28:6e:d4:8a:32:99

Slave queue ID: 0