For background on attention mechanisms, see the earlier article in this series.

Transformer Block

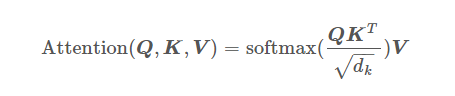

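Scaled dot-product attention in BERT

Formula:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$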

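Code:

import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class Attention(nn.Module):
    """
    Scaled Dot Product Attention
    """

    def forward(self, query, key, value, mask=None, dropout=None):
        # Raw scores: Q K^T scaled by sqrt(d_k)
        scores = torch.matmul(query, key.transpose(-2, -1)) \
            / math.sqrt(query.size(-1))

        if mask is not None:
            # Padded positions (mask == 0) get a very large negative score
            scores = scores.masked_fill(mask == 0, -1e9)

        # softmax turns the scores into attention probabilities p_attn
        p_attn = F.softmax(scores, dim=-1)

        # If a dropout module is given, randomly drop attention weights at its rate
        if dropout is not None:
            p_attn = dropout(p_attn)

        return torch.matmul(p_attn, value), p_attn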

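Self-attention is usually computed over a mini-batch, that is, several sentences at once. The sentences differ in length, so the shorter ones are padded with zeros up to the longest length. Padding distorts the softmax, though: a padded position contributes $e^0=1$, a nonzero value, so it would receive attention weight and skew the result. To prevent this, the padded positions are masked by filling their scores with a very large negative number (-1e9 here, standing in for negative infinity), so that after the softmax their weight is effectively 0 and they are excluded from the computation.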
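
The effect of the mask can be checked on a single row of scores. The following is a minimal sketch in plain Python, not the PyTorch code above; the helper name `masked_softmax` and the example scores are made up for illustration.

```python
import math

def masked_softmax(scores, mask, fill_value=-1e9):
    """Softmax over one row of attention scores; mask == 0 marks padding."""
    # Replace the scores at padded positions with a very large negative number.
    filled = [s if m != 0 else fill_value for s, m in zip(scores, mask)]
    # Subtract the maximum before exponentiating, for numerical stability.
    top = max(filled)
    exps = [math.exp(s - top) for s in filled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for a 4-token row whose last two tokens are padding.
scores = [2.0, 1.0, 0.0, 0.0]
mask = [1, 1, 0, 0]

unmasked = masked_softmax(scores, [1, 1, 1, 1])  # padding still gets weight
masked = masked_softmax(scores, mask)            # padding weight is 0
```

Without the mask, the two padded positions take a visible share of the attention weight; with it, the whole weight is distributed over the real tokens.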

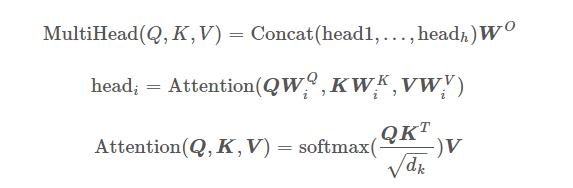

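Multi-head self-attention

Formula:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^{O}, \qquad \mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$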

Attention Mask

Code:

class MultiHeadedAttention(nn.Module):
    """
    Take in model size and number of heads.
    """

    def __init__(self, h, d_model, dropout=0.1):
        # h is the number of attention heads
        super().__init__()
        assert d_model % h == 0

        # d_k is the per-head key dimension; d_model is the model output
        # dimension and must be an integer multiple of h
        self.d_k = d_model // h
        self.h = h

        self.linear_layers = nn.ModuleList([nn.Linear(d_model, d_model) for _ in range(3)])
        self.output_linear = nn.Linear(d_model, d_model)
        self.attention = Attention()

        self.dropout = nn.Dropout(p=dropout)

    def forward(self, query, key, value, mask=None):
        batch_size = query.size(0)

        # 1) Do all the linear projections in batch from d_model => h x d_k
        query, key, value = [l(x).view(batch_size, -1, self.h, self.d_k).transpose(1, 2)
                             for l, x in zip(self.linear_layers, (query, key, value))]

        # 2) Apply attention on all the projected vectors in batch.
        x, attn = self.attention(query, key, value, mask=mask, dropout=self.dropout)

        # 3) "Concat" using a view and apply a final linear.
        x = x.transpose(1, 2).contiguous().view(batch_size, -1, self.h * self.d_k)

        return self.output_linear(x)
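
For a single token vector, the `view` in step 1 amounts to slicing the `d_model` features into `h` contiguous chunks of size `d_k`, and the `view` in step 3 concatenates them again. A small plain-Python sketch of that bookkeeping, with hypothetical helper names `split_heads` and `merge_heads`:

```python
def split_heads(vector, h):
    """Split one d_model-sized vector into h chunks of size d_k = d_model // h."""
    d_model = len(vector)
    assert d_model % h == 0, "d_model must be a multiple of h"
    d_k = d_model // h
    return [vector[i * d_k:(i + 1) * d_k] for i in range(h)]

def merge_heads(heads):
    """Concatenate the per-head chunks back into one d_model-sized vector."""
    return [value for head in heads for value in head]

token_vector = list(range(8))           # toy vector with d_model = 8
heads = split_heads(token_vector, h=4)  # 4 heads, d_k = 2 each
restored = merge_heads(heads)           # same values, original order
```

With the sizes used later in this post (`hidden=768`, `attn_heads=12`), each head works on `d_k = 64` features.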

Position-wise FFN

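The position-wise FFN is a two-layer neural network. The paper uses ReLU as the activation:

Formula:

$$\mathrm{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2$$

Note: Google's BERT implementation on GitHub uses the Gaussian Error Linear Unit (GELU) instead of ReLU as the activation function.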

Code:

class PositionwiseFeedForward(nn.Module):

    def __init__(self, d_model, d_ff, dropout=0.1):
        super(PositionwiseFeedForward, self).__init__()
        self.w_1 = nn.Linear(d_model, d_ff)
        self.w_2 = nn.Linear(d_ff, d_model)
        self.dropout = nn.Dropout(dropout)
        self.activation = GELU()

    def forward(self, x):
        # Expand to d_ff, apply GELU and dropout, then project back to d_model
        return self.w_2(self.dropout(self.activation(self.w_1(x))))


class GELU(nn.Module):
    """
    Gaussian Error Linear Unit.
    This is a smoother version of the RELU.
    Original paper: https://arxiv.org/abs/1606.08415
    """

    def forward(self, x):
        # tanh approximation of GELU
        return 0.5 * x * (1 + torch.tanh(math.sqrt(2 / math.pi) * (x + 0.044715 * torch.pow(x, 3))))
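
The expression in `GELU.forward` is the tanh approximation of GELU. As a quick sanity check, the sketch below compares it with the definition through the Gaussian CDF, using only `math`; the function names and sample points are chosen here for illustration.

```python
import math

def gelu_exact(x):
    """GELU defined through the Gaussian CDF: x * Phi(x)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x):
    """Tanh approximation, the same expression as in the GELU class above."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

def relu(x):
    return max(0.0, x)

points = [-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 3.0]
# Largest difference between the two GELU variants on the sample points.
max_gap = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in points)
```

On these points the two variants stay very close. Unlike ReLU, GELU returns a small negative value for moderately negative inputs instead of exactly 0.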

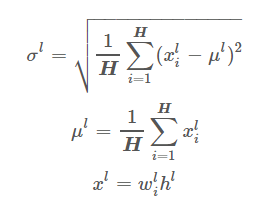

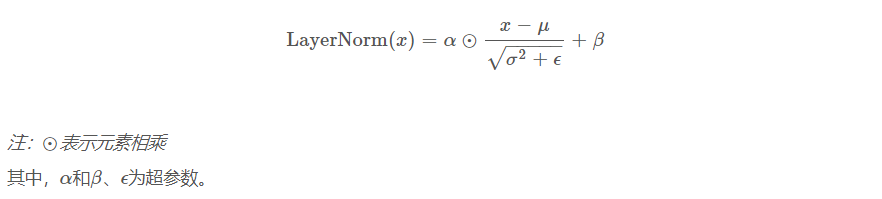

Layer Normalization

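LayerNorm applies layer normalization to the hidden layer: it normalizes the inputs to all neurons of a given layer (along the channel, or feature, dimension), which speeds up training: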

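Layer normalization formula:

$$\mu^{l} = \frac{1}{H}\sum_{i=1}^{H} \alpha_i^{l}, \qquad \sigma^{l} = \sqrt{\frac{1}{H}\sum_{i=1}^{H}\left(\alpha_i^{l} - \mu^{l}\right)^{2}}, \qquad \alpha_i^{l} = {w_i^{l}}^{\top} h^{l}$$

$l$ denotes the $l$-th layer, $H$ is the number of hidden units in the layer, $\mu$ is the mean, $\sigma$ is the standard deviation, $\alpha$ is the vector of summed inputs to the layer's neurons, $w$ is the weight matrix, and $h^{l}$ is the input to the layer.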

Code:

class LayerNorm(nn.Module):
    "Construct a layernorm module (See citation for details)."

    def __init__(self, features, eps=1e-6):
        super(LayerNorm, self).__init__()
        # Learnable gain (a_2) and bias (b_2), one value per feature
        self.a_2 = nn.Parameter(torch.ones(features))
        self.b_2 = nn.Parameter(torch.zeros(features))
        self.eps = eps

    def forward(self, x):
        # mean(-1) averages over the last dimension, i.e. the innermost
        # (feature) dimension
        mean = x.mean(-1, keepdim=True)
        std = x.std(-1, keepdim=True)
        return self.a_2 * (x - mean) / (std + self.eps) + self.b_2
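
One detail worth knowing: by default `Tensor.std` in PyTorch uses the unbiased estimate, dividing by `n - 1` rather than by the number of features. The plain-Python sketch below (hypothetical helper `layer_norm`, gain and bias left at their initial 1 and 0) shows both variants on one toy vector.

```python
import math

def layer_norm(values, eps=1e-6, unbiased=True):
    """Normalize one feature vector to zero mean and roughly unit spread."""
    n = len(values)
    mean = sum(values) / n
    # unbiased=True divides by n - 1, the default behaviour of Tensor.std.
    denom = n - 1 if unbiased else n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / denom)
    # eps is added to std, as in the LayerNorm class above.
    return [(v - mean) / (std + eps) for v in values]

hidden = [1.0, 2.0, 3.0, 4.0]
normalized = layer_norm(hidden)                             # divides by n - 1
normalized_population = layer_norm(hidden, unbiased=False)  # divides by n
```

The two results differ only by a constant factor, which the learnable gain `a_2` can absorb during training.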

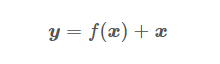

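Residual connection

The residual connection is the Add & Norm layer in the figure. After each module, the value from before the module is added to the value after it, which gives the residual connection. The residual path lets gradients take a shortcut straight back to the earliest layers.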

Residual connection formula:

$$X + \mathrm{SubLayer}(X)$$

$X$ is the input; the operation is simply an addition across layers.

Code:

class SublayerConnection(nn.Module):
    """
    A residual connection followed by a layer norm.
    Note for code simplicity the norm is first as opposed to last.
    """

    def __init__(self, size, dropout):
        super(SublayerConnection, self).__init__()
        self.norm = LayerNorm(size)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, sublayer):
        "Apply residual connection to any sublayer with the same size."
        # Add and Norm: normalize first, run the sublayer, then add the input back
        return x + self.dropout(sublayer(self.norm(x)))
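
The docstring notes that the norm is applied first rather than last. The toy sketch below contrasts the two orderings; `normalize` is a stand-in that only subtracts the mean, `double` is a made-up sublayer, and dropout is left out, so this illustrates the ordering only.

```python
def normalize(values):
    """Toy stand-in for LayerNorm: subtract the mean only."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def pre_norm(x, sublayer):
    """x + sublayer(norm(x)): the ordering used by SublayerConnection above."""
    return [a + b for a, b in zip(x, sublayer(normalize(x)))]

def post_norm(x, sublayer):
    """norm(x + sublayer(x)): the ordering described in the Transformer paper."""
    return normalize([a + b for a, b in zip(x, sublayer(x))])

def double(values):
    """Hypothetical sublayer that doubles every value."""
    return [2.0 * v for v in values]

x = [1.0, 3.0]
pre = pre_norm(x, double)    # input is added back unchanged
post = post_norm(x, double)  # the sum itself is normalized
```

In the pre-norm variant the input `x` passes through the addition untouched, so the shortcut path never goes through a normalization.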

Transformer Block

Code:

class TransformerBlock(nn.Module):
    """
    Bidirectional Encoder = Transformer (self-attention)
    Transformer = MultiHead_Attention + Feed_Forward with sublayer connection
    """

    def __init__(self, hidden, attn_heads, feed_forward_hidden, dropout):
        """
        :param hidden: hidden size of transformer
        :param attn_heads: number of heads in multi-head attention
        :param feed_forward_hidden: feed_forward_hidden, usually 4*hidden_size
        :param dropout: dropout rate
        """

        super().__init__()
        # Multi-head attention
        self.attention = MultiHeadedAttention(h=attn_heads, d_model=hidden)
        # Position-wise feed-forward network
        self.feed_forward = PositionwiseFeedForward(d_model=hidden, d_ff=feed_forward_hidden, dropout=dropout)
        # Input sublayer: residual connection around the attention
        self.input_sublayer = SublayerConnection(size=hidden, dropout=dropout)
        # Output sublayer: residual connection around the feed-forward network
        self.output_sublayer = SublayerConnection(size=hidden, dropout=dropout)
        self.dropout = nn.Dropout(p=dropout)

    def forward(self, x, mask):
        # Self-attention: query, key and value are all the same input
        x = self.input_sublayer(x, lambda _x: self.attention.forward(_x, _x, _x, mask=mask))
        x = self.output_sublayer(x, self.feed_forward)
        return self.dropout(x)

Embedding layer

The embedding is represented as the sum of three embeddings:

Code:

class BERTEmbedding(nn.Module):
    """
    BERT Embedding, which consists of the following features:
        1. TokenEmbedding : normal embedding matrix
        2. PositionalEmbedding : adding positional information using sin, cos
        3. SegmentEmbedding : adding sentence segment info (sent_A:1, sent_B:2)

    The sum of all these features is the output of BERTEmbedding
    """

    def __init__(self, vocab_size, embed_size, dropout=0.1):
        """
        :param vocab_size: total vocab size
        :param embed_size: embedding size of token embedding
        :param dropout: dropout rate
        """
        super().__init__()
        self.token = TokenEmbedding(vocab_size=vocab_size, embed_size=embed_size)
        self.position = PositionalEmbedding(d_model=self.token.embedding_dim)
        self.segment = SegmentEmbedding(embed_size=self.token.embedding_dim)
        self.dropout = nn.Dropout(p=dropout)
        self.embed_size = embed_size

    def forward(self, sequence, segment_label):
        # Sum of token, positional and segment embeddings
        x = self.token(sequence) + self.position(sequence) + self.segment(segment_label)
        return self.dropout(x)

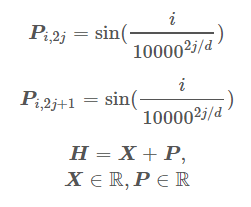

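Positional encoding (Positional Embedding)

The positional embedding has shape [max sequence length, embedding dimension]. The embedding dimension equals the word-vector dimension, and max sequence length is a hyperparameter: the maximum allowed length of a single sentence.

Formula:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

The resulting plot: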

Code:

class PositionalEmbedding(nn.Module):

    def __init__(self, d_model, max_len=512):
        super().__init__()

        # Compute the positional encodings once in log space.
        pe = torch.zeros(max_len, d_model).float()
        pe.requires_grad = False

        position = torch.arange(0, max_len).float().unsqueeze(1)
        div_term = (torch.arange(0, d_model, 2).float() * -(math.log(10000.0) / d_model)).exp()

        # Even feature indices use sin, odd feature indices use cos
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)

        # Add a leading batch dimension (dimension 0)
        pe = pe.unsqueeze(0)
        # Register pe as a persistent buffer; a buffer can be accessed as an
        # attribute under the given name
        self.register_buffer('pe', pe)

    def forward(self, x):
        # Return the encodings for the first x.size(1) positions
        return self.pe[:, :x.size(1)]
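
The `div_term` computed "in log space" is the same quantity as $1/10000^{2i/d_{model}}$, since $\exp(-k \ln(10000)/d_{model}) = 10000^{-k/d_{model}}$ for an even index $k = 2i$. A loop-based sketch in plain Python (the function name `positional_encoding` is illustrative) builds the same table entry by entry:

```python
import math

def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position encodings."""
    table = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for k in range(0, d_model, 2):
            # Same quantity as div_term above, for the even feature index k.
            freq = math.exp(-k * math.log(10000.0) / d_model)
            table[pos][k] = math.sin(pos * freq)
            if k + 1 < d_model:
                table[pos][k + 1] = math.cos(pos * freq)
    return table

pe = positional_encoding(max_len=4, d_model=6)
# Position 0 encodes to sin(0) = 0 on even indices and cos(0) = 1 on odd ones.
first_row = pe[0]
```

Low feature indices oscillate quickly with position, high feature indices slowly, so each position gets a distinct pattern.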

Segment Embedding

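Segment embeddings tell the model which sentence or segment each token belongs to. The embedding table here has three entries: 0 for padding, 1 for sentence A, and 2 for sentence B. The special tokens, [CLS] at the start of the input, [SEP] at the end of each sentence, and [MASK] for masked words, belong to the token vocabulary rather than to the segment ids. For example: [CLS] the man went to the store [SEP] he bought a gallon of milk [SEP]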

Code:

class SegmentEmbedding(nn.Embedding):
    def __init__(self, embed_size=512):
        # 3 segment ids: 0 = padding, 1 = sentence A, 2 = sentence B
        super().__init__(3, embed_size, padding_idx=0)
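
To make the segment ids concrete, here is a sketch of how the labels for one sentence pair could be built. It assumes the convention from the `BERTEmbedding` docstring (sent_A:1, sent_B:2) with 0 for padding; the helper `segment_labels` and the `[PAD]` token name are hypothetical and not part of the code above.

```python
def segment_labels(tokens_a, tokens_b, max_len):
    """Build token and segment-id lists for one sentence pair, padded to max_len."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # [CLS], sentence A and its [SEP] get id 1; sentence B and its [SEP] get id 2.
    labels = [1] * (len(tokens_a) + 2) + [2] * (len(tokens_b) + 1)
    padding = max_len - len(tokens)
    assert padding >= 0, "max_len is too small for this pair"
    # Padding positions get the hypothetical [PAD] token and segment id 0.
    return tokens + ["[PAD]"] * padding, labels + [0] * padding

sentence_a = "the man went to the store".split()
sentence_b = "he bought a gallon of milk".split()
tokens, labels = segment_labels(sentence_a, sentence_b, max_len=16)
```

The `labels` list is what would be passed as `segment_label` after conversion to a tensor.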

Token Embedding

Code:

class TokenEmbedding(nn.Embedding):
    def __init__(self, vocab_size, embed_size=512):
        # Index 0 is reserved for padding
        super().__init__(vocab_size, embed_size, padding_idx=0)

BERT

class BERT(nn.Module):
    """
    BERT model : Bidirectional Encoder Representations from Transformers.
    """

    def __init__(self, vocab_size, hidden=768, n_layers=12, attn_heads=12, dropout=0.1):
        """
        :param vocab_size: total vocabulary size
        :param hidden: hidden size of the BERT model
        :param n_layers: number of Transformer blocks (layers)
        :param attn_heads: number of attention heads
        :param dropout: dropout rate
        """

        super().__init__()
        self.hidden = hidden
        self.n_layers = n_layers
        self.attn_heads = attn_heads

        # paper noted they used 4*hidden_size for ff_network_hidden_size
        self.feed_forward_hidden = hidden * 4

        # Embedding layer: positional + segment + token
        self.embedding = BERTEmbedding(vocab_size=vocab_size, embed_size=hidden)

        # Stack of Transformer blocks
        self.transformer_blocks = nn.ModuleList(
            [TransformerBlock(hidden, attn_heads, hidden * 4, dropout) for _ in range(n_layers)])

    def forward(self, x, segment_info):
        # attention masking for padded token
        # mask shape: [batch_size, 1, seq_len, seq_len]
        mask = (x > 0).unsqueeze(1).repeat(1, x.size(1), 1).unsqueeze(1)

        # embedding the indexed sequence to sequence of vectors
        x = self.embedding(x, segment_info)

        # Run through the stacked Transformer blocks
        for transformer in self.transformer_blocks:
            x = transformer.forward(x, mask)

        return x

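Tricks for training the language model

How does BERT train without labels? The following techniques make self-supervised training possible:

Mask

Randomly mask or replace any characters or words in a sentence, then have the model predict the masked or replaced part from its understanding of the context. The loss is computed only over the masked part.

Randomly select 15% of the tokens in a sentence and replace them as follows:

- with 80% probability, the token is replaced with [MASK];

- with 10% probability, it is replaced with any other token;

- with 10% probability, it is left unchanged.

The model predicts and restores the masked or replaced parts, and the loss function only counts the loss at those randomly masked or replaced positions.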
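
The 15% / 80-10-10 rule above can be sketched in a few lines of plain Python. This is an illustrative version under stated assumptions, not the data pipeline used with the model in this post: the function name `mask_tokens`, its parameters, and the use of `None` for "no loss at this position" are all choices made here.

```python
import random

def mask_tokens(tokens, vocab, mask_token="[MASK]", select_prob=0.15, rng=random):
    """Return (corrupted tokens, labels); labels are None where no loss is computed."""
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() >= select_prob:
            # Not selected: keep the token and compute no loss here.
            corrupted.append(token)
            labels.append(None)
            continue
        labels.append(token)  # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            corrupted.append(mask_token)          # 80%: replace with [MASK]
        elif roll < 0.9:
            # 10%: replace with a different token from the vocabulary.
            others = [v for v in vocab if v != token] or vocab
            corrupted.append(rng.choice(others))
        else:
            corrupted.append(token)               # 10%: leave unchanged
    return corrupted, labels

vocab = ["the", "man", "went", "to", "store"]
sentence = ["the", "man", "went", "to", "the", "store"]
corrupted, labels = mask_tokens(sentence, vocab, rng=random.Random(0))
```

Keeping 10% of the selected tokens unchanged means the model cannot assume that every visible token is correct, since a selected position may show the original token, a random one, or [MASK].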

Code:

class MaskedLanguageModel(nn.Module):
    """
    predicting origin token from masked input sequence
    n-class classification problem, n-class = vocab_size
    """

    def __init__(self, hidden, vocab_size):
        """
        :param hidden: output size of BERT model
        :param vocab_size: total vocab size
        """
        super().__init__()
        self.linear = nn.Linear(hidden, vocab_size)
        self.softmax = nn.LogSoftmax(dim=-1)

    def forward(self, x):
        # Log-probabilities over the vocabulary for every position
        return self.softmax(self.linear(x))

Next sentence prediction

Code:

class NextSentencePrediction(nn.Module):
    """
    2-class classification model : is_next, is_not_next
    """

    def __init__(self, hidden):
        """
        :param hidden: BERT model output size
        """
        super().__init__()
        self.linear = nn.Linear(hidden, 2)
        # LogSoftmax is used here instead of softmax: the softmax gradient
        # becomes very small when the prediction is far from the true value,
        # and the LogSoftmax gradient behaves better
        self.softmax = nn.LogSoftmax(dim=-1)

    def forward(self, x):
        # Classify from the output at the first position, x[:, 0]
        return self.softmax(self.linear(x[:, 0]))

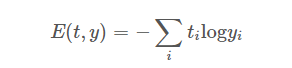

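Loss function

Negative log-likelihood loss, also called cross-entropy. Formula:

$$L = -\sum_{i} y_i \log \hat{y}_i$$

where $y$ is the one-hot target and $\hat{y}$ is the predicted probability distribution.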

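Code:

# In PyTorch, CrossEntropyLoss() equals LogSoftmax + NLLLoss, so a model trained
# with CrossEntropyLoss does not need a softmax in its last layer
# ignore_index=0 excludes targets equal to 0 from the masked-token loss
mask_criterion = nn.NLLLoss(ignore_index=0)
# Assumption: data["is_next"] uses 0 for is_not_next. With ignore_index=0 those
# examples would be dropped, so the next-sentence loss uses a plain NLLLoss
next_criterion = nn.NLLLoss()

# next_sent_output and mask_lm_output are the two outputs of BERTLM;
# data is one batch from the training loop
# 2-1. NLL (negative log likelihood) loss of is_next classification result
next_loss = next_criterion(next_sent_output, data["is_next"])

# 2-2. NLLLoss of predicting masked token word
mask_loss = mask_criterion(mask_lm_output.transpose(1, 2), data["bert_label"])

# 2-3. Adding next_loss and mask_loss : 3.4 Pre-training Procedure
loss = next_loss + mask_loss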
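
What NLL loss with an ignored index computes can be worked through by hand. The plain-Python sketch below is a simplified illustration, not PyTorch's implementation: it averages the negative log-probability of the target over the positions whose target is not `ignore_index`. The helper names and the toy logits are made up.

```python
import math

def log_softmax(logits):
    """Log of the softmax, computed with the log-sum-exp trick."""
    top = max(logits)
    log_total = top + math.log(sum(math.exp(v - top) for v in logits))
    return [v - log_total for v in logits]

def nll_loss(log_probs, targets, ignore_index=0):
    """Mean negative log-likelihood over targets that are not ignore_index."""
    kept = [-row[t] for row, t in zip(log_probs, targets) if t != ignore_index]
    # This sketch returns 0.0 when every target is ignored.
    return sum(kept) / len(kept) if kept else 0.0

# Three positions, vocabulary of size 3; the last target is 0 and is ignored.
logits = [[0.0, 2.0, 1.0], [0.0, 0.5, 3.0], [1.0, 1.0, 1.0]]
targets = [1, 2, 0]
loss = nll_loss([log_softmax(row) for row in logits], targets)
```

Only the first two positions contribute to `loss`, which mirrors how the masked-token loss counts only the masked or replaced positions.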

Language model training

Code:

class BERTLM(nn.Module):
    """
    BERT Language Model
    Next Sentence Prediction Model + Masked Language Model
    """

    def __init__(self, bert: BERT, vocab_size):
        """
        :param bert: BERT model which should be trained
        :param vocab_size: total vocab size for masked_lm
        """

        super().__init__()
        self.bert = bert
        self.next_sentence = NextSentencePrediction(self.bert.hidden)
        self.mask_lm = MaskedLanguageModel(self.bert.hidden, vocab_size)

    def forward(self, x, segment_label):
        x = self.bert(x, segment_label)
        # Both heads share the same BERT output
        return self.next_sentence(x), self.mask_lm(x)