In the previous article, Binder mRemote的前世今生 ("The Past and Present of Binder mRemote"), we traced the call flow of PowerManager.isScreenOn() as far as BinderProxy.transact();

/frameworks/base/core/java/android/os/Binder.java

------> Binder.java——>BinderProxy

```java
public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
    Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
    return transactNative(code, data, reply, flags);
}

public native boolean transactNative(int code, Parcel data, Parcel reply,
        int flags) throws RemoteException;
```

BinderProxy.transact() calls the JNI method transactNative();

/frameworks/base/core/jni/android_util_Binder.cpp

------> android_util_Binder.cpp

```cpp
static const JNINativeMethod gBinderProxyMethods[] = {
    /* name, signature, funcPtr */
    {"pingBinder",          "()Z", (void*)android_os_BinderProxy_pingBinder},
    {"isBinderAlive",       "()Z", (void*)android_os_BinderProxy_isBinderAlive},
    {"getInterfaceDescriptor", "()Ljava/lang/String;", (void*)android_os_BinderProxy_getInterfaceDescriptor},
    {"transactNative",      "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},
    {"linkToDeath",         "(Landroid/os/IBinder$DeathRecipient;I)V", (void*)android_os_BinderProxy_linkToDeath},
    {"unlinkToDeath",       "(Landroid/os/IBinder$DeathRecipient;I)Z", (void*)android_os_BinderProxy_unlinkToDeath},
    {"destroy",             "()V", (void*)android_os_BinderProxy_destroy},
};
```

```cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException
{
    if (dataObj == NULL) {
        jniThrowNullPointerException(env, NULL);
        return JNI_FALSE;
    }

    Parcel* data = parcelForJavaObject(env, dataObj); // convert the Java-layer Parcel into the native Parcel
    if (data == NULL) {
        return JNI_FALSE;
    }
    Parcel* reply = parcelForJavaObject(env, replyObj);
    if (reply == NULL && replyObj != NULL) {
        return JNI_FALSE;
    }

    IBinder* target = (IBinder*)
        env->GetLongField(obj, gBinderProxyOffsets.mObject); // the key step
    // Remember gBinderProxyOffsets.mObject? Search the previous article and you will find
    //   env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());
    // where val is the BpBinder obtained on the native side.
    // SetLongField stores the native BpBinder in the mObject field of the Java BinderProxy;
    // GetLongField reads it back out of mObject, so target is that BpBinder.
    // Many system classes that use JNI do this, so they don't have to fetch the
    // native object from the lower layer every time.
    if (target == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");
        return JNI_FALSE;
    }

    // ...... (the ENABLE_BINDER_SAMPLE setup of time_binder_calls / start_millis is omitted)
    //printf("Transact from Java code to %p sending: ", target); data->print();
    status_t err = target->transact(code, *data, reply, flags); // the key step: calls BpBinder::transact()
    //if (reply) printf("Transact from Java code to %p received: ", target); reply->print();
#if ENABLE_BINDER_SAMPLE
    if (time_binder_calls) {
        conditionally_log_binder_call(start_millis, target, code);
    }
#endif

    if (err == NO_ERROR) {
        return JNI_TRUE;
    } else if (err == UNKNOWN_TRANSACTION) {
        return JNI_FALSE;
    }

    signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/);
    return JNI_FALSE;
}
```

Here IBinder* target is the mObject field of the Java-layer BinderProxy, i.e. the BpBinder object that javaObjectForIBinder stored with SetLongField in the previous article. Next comes the call to BpBinder::transact():
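The SetLongField/GetLongField trick amounts to parking a native pointer in a 64-bit integer field and casting it back later. Below is a minimal, JNI-free C++ sketch of that round trip; `NativeProxy` and `JavaObjectStub` are hypothetical stand-ins for BpBinder and for the Java BinderProxy with its mObject field, not real framework types.

```cpp
#include <cstdint>

// Hypothetical stand-in for the native BpBinder object.
struct NativeProxy {
    int32_t handle;  // driver-level reference handle
};

// Hypothetical stand-in for the Java BinderProxy: all that matters here is
// a 64-bit field (like mObject) that can hold a pointer value.
struct JavaObjectStub {
    int64_t mObject = 0;
};

// Counterpart of env->SetLongField(obj, gBinderProxyOffsets.mObject, (jlong)ptr).
void attachNative(JavaObjectStub& obj, NativeProxy* ptr) {
    obj.mObject = static_cast<int64_t>(reinterpret_cast<intptr_t>(ptr));
}

// Counterpart of (IBinder*)env->GetLongField(obj, gBinderProxyOffsets.mObject).
NativeProxy* nativeFrom(const JavaObjectStub& obj) {
    return reinterpret_cast<NativeProxy*>(static_cast<intptr_t>(obj.mObject));
}
```

Because the field is 64 bits wide, the same scheme works for both 32-bit and 64-bit processes; the cost is that nothing in the type system stops the Java side from holding a stale value, which is why the real code checks for NULL before using it.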

/frameworks/native/libs/binder/BpBinder.cpp

------> BpBinder.cpp

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags); // the key step
        // mHandle is the handle of the *reference* to the remote service
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }
    return DEAD_OBJECT;
}
```

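This part is simple: it just calls IPCThreadState::self()->transact(). mHandle is assigned when the BpBinder object is constructed; it is the flat->handle that readStrongBinder passed to getStrongProxyForHandle(flat->handle) in the getService flow we walked through earlier. mHandle identifies this process's reference to the service.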
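The mAlive flag makes death sticky: after one DEAD_OBJECT, the proxy stops calling into the driver at all. A small C++ model of that behavior, assuming a hypothetical `ProxySketch` class and a pluggable transport function in place of IPCThreadState::self()->transact() (the numeric status values are illustrative):

```cpp
#include <cstdint>
#include <functional>
#include <utility>

// Status names borrowed from libbinder; the numeric values are illustrative.
enum Status : int32_t { NO_ERROR = 0, DEAD_OBJECT = -32 };

// Hypothetical transport standing in for IPCThreadState::self()->transact().
using Transport = std::function<Status(int32_t handle, uint32_t code)>;

class ProxySketch {
public:
    ProxySketch(int32_t handle, Transport transport)
        : mHandle(handle), mTransport(std::move(transport)) {}

    Status transact(uint32_t code) {
        // Once a binder has died, it will never come back to life.
        if (!mAlive) return DEAD_OBJECT;
        Status status = mTransport(mHandle, code);
        if (status == DEAD_OBJECT) mAlive = false;
        return status;
    }

    bool alive() const { return mAlive; }

private:
    int32_t mHandle;      // identifies the remote service reference
    Transport mTransport;
    bool mAlive = true;
};
```

Usage: construct it with a handle and a lambda standing in for the driver; once the lambda returns DEAD_OBJECT, later transact() calls return DEAD_OBJECT without invoking it again.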

/frameworks/native/libs/binder/IPCThreadState.cpp

------> IPCThreadState.cpp

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err = data.errorCheck(); // validate the data
    flags |= TF_ACCEPT_FDS;

    IF_LOG_TRANSACTIONS() {
        TextOutput::Bundle _b(alog);
        alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "
            << handle << " / code " << TypeCode(code) << ": "
            << indent << data << dedent << endl;
    }

    if (err == NO_ERROR) {
        LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),
            (flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");
        // pack the data into a binder_transaction_data
        err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
    }

    if (err != NO_ERROR) {
        if (reply) reply->setError(err);
        return (mLastError = err);
    }

    if ((flags & TF_ONE_WAY) == 0) {
        #if 0
        if (code == 4) { // relayout
            ALOGI(">>>>>> CALLING transaction 4");
        } else {
            ALOGI(">>>>>> CALLING transaction %d", code);
        }
        #endif
        if (reply) {
            err = waitForResponse(reply); // the key call
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
        #if 0
        if (code == 4) { // relayout
            ALOGI("<<<<<< RETURNING transaction 4");
        } else {
            ALOGI("<<<<<< RETURNING transaction %d", code);
        }
        #endif

        IF_LOG_TRANSACTIONS() {
            TextOutput::Bundle _b(alog);
            alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "
                << handle << ": ";
            if (reply) alog << indent << *reply << dedent << endl;
            else alog << "(none requested)" << endl;
        }
    } else {
        err = waitForResponse(NULL, NULL);
    }

    return err;
}
```

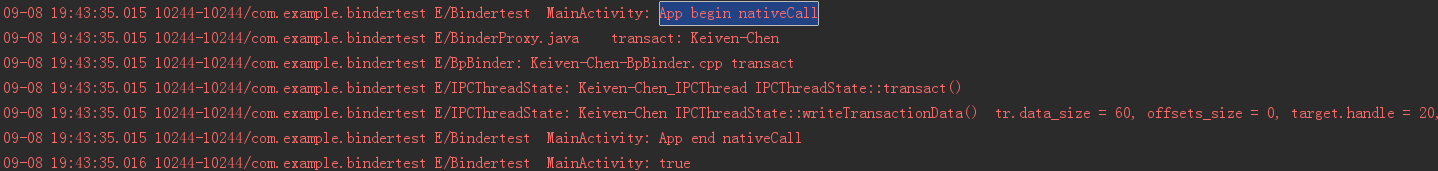

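From the first Binder article until now I have written a lot in one go, and I almost lost track of whether all of it is correct, which is a bad habit. So here I verify the analysis above by adding logs.

Here is the code with the logs I added: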

APP:

```java
String TAG = "Bindertest MainActivity";
Log.e(TAG, "App begin nativeCall");
boolean bool = powerManager.isScreenOn();
Log.e(TAG, "App end nativeCall");
```

Binder.java——>BinderProxy class

```java
public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
    Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
    Log.e("BinderProxy.java transact", "Keiven-Chen"); // log added by me
    return transactNative(code, data, reply, flags);
}
```

BpBinder.cpp

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        ALOGE("Keiven-Chen-BpBinder.cpp transact"); // log added by me
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }
    return DEAD_OBJECT;
}
```

IPCThreadState.cpp

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err = data.errorCheck();
    flags |= TF_ACCEPT_FDS;

    if (getpid() == g_nTargetPid) // filter condition
        ALOGE("Keiven-Chen_IPCThread IPCThreadState::transact()"); // log added by me
    // The getpid() == g_nTargetPid filter is needed because IPCThreadState::transact()
    // is called very frequently by many processes; without it the log would be
    // flooded and hard to follow.

    IF_LOG_TRANSACTIONS() {
        TextOutput::Bundle _b(alog);
        alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "
            << handle << " / code " << TypeCode(code) << ": "
            << indent << data << dedent << endl;
    }
    // ......
}
```

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;
    // ......
    // Log added by me, printed only for the process whose PID is g_nTargetPid,
    // because writeTransactionData() is also called very frequently system-wide.
    // The format specifiers match the field types (binder_size_t may be 64-bit).
    if (getpid() == g_nTargetPid)
        ALOGE("Keiven-Chen IPCThreadState::writeTransactionData() tr.data_size = %llu, offsets_size = %llu, "
              "target.handle = %u, data.ipcObjects() = %p, ipcObjectsCount() = %zu",
              (unsigned long long)tr.data_size, (unsigned long long)tr.offsets_size,
              tr.target.handle, (const void*)data.ipcObjects(), data.ipcObjectsCount());

    mOut.writeInt32(cmd);
    mOut.write(&tr, sizeof(tr));
    return NO_ERROR;
}
```

The logs I added follow the mRemote trace from the previous article, and their output indicates that the earlier analysis is correct;

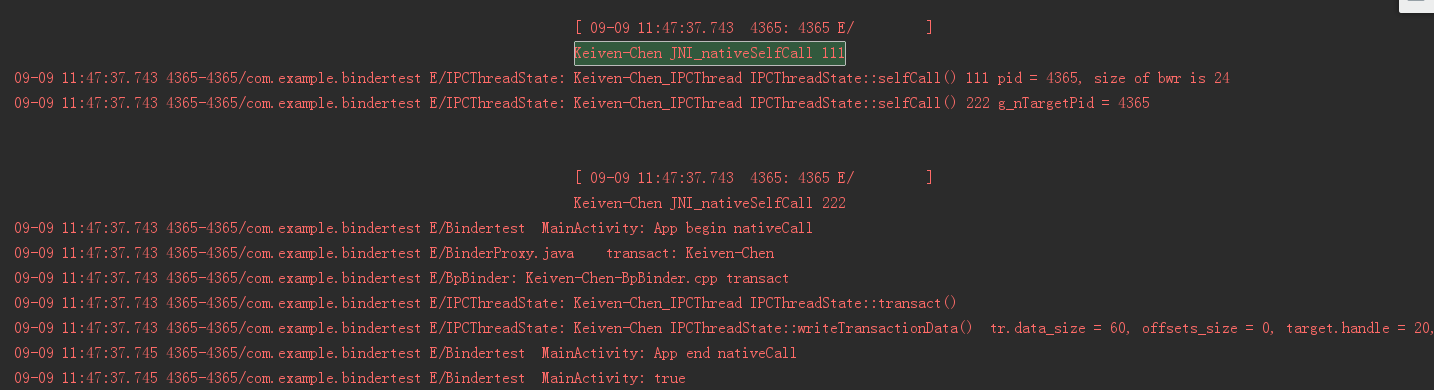

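One thing to be clear about: when a Binder call reaches IPCThreadState::transact(), it has not crossed the process boundary yet; it is still running inside the client process. In the code above, getpid() returns the current client process ID (PID). So where does g_nTargetPid come from? It is the client's PID, recorded inside IPCThreadState when my client calls one of its methods.

Which method records this PID? It cannot be something every client calls by default; it should run only in my client. So we have to add a dedicated method to IPCThreadState.cpp ourselves. The method is trivial: it just assigns g_nTargetPid = getpid(). After that, whenever my client enters IPCThreadState::transact() again, comparing g_nTargetPid with getpid() tells us whether we are in my client process. This is what the processInfo.nativeSelfCall() call from the previous article is for: nativeSelfCall is a JNI method whose implementation calls IPCThreadState::selfCall(), and selfCall() executes g_nTargetPid = getpid() to record our client's PID;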
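Stripped of Binder, the filtering trick is just a variable that only one process ever sets. Because libbinder is loaded separately into every process, each process has its own copy of g_nTargetPid, so only the app that called selfCall() sees a match. A minimal sketch under that assumption; `selfCall()` and `shouldTrace()` here are simplified free functions, not the real IPCThreadState methods:

```cpp
#include <sys/types.h>
#include <unistd.h>

// Hypothetical recreation of the author's hook. Every process that loads this
// code gets its own copy of the variable, and it stays 0 unless that process
// explicitly opts in.
static pid_t g_nTargetPid = 0;

// Simplified counterpart of IPCThreadState::selfCall(): remember our own PID.
void selfCall() { g_nTargetPid = getpid(); }

// The guard placed in front of each ALOGE in transact()/writeTransactionData().
bool shouldTrace() { return g_nTargetPid > 0 && getpid() == g_nTargetPid; }
```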

App part

```java
findViewById(R.id.mybtn).setOnClickListener(new View.OnClickListener() {
    @Override
    public void onClick(View v) {
        ProcessInfo processInfo = new ProcessInfo();
        processInfo.nativeSelfCall(); // JNI call into selfCall() in IPCThreadState.cpp
        PowerManager powerManager = (PowerManager) getSystemService(Context.POWER_SERVICE);
        Log.e(TAG, "App begin nativeCall");
        boolean bool = powerManager.isScreenOn();
        Log.e(TAG, "App end nativeCall");
        Log.e(TAG, "" + bool);
    }
});
```

The fully qualified name of ProcessInfo must be com.example.bindservice.ProcessInfo (file ProcessInfo.java):

```java
package com.example.bindservice;

public class ProcessInfo {
    static {
        System.loadLibrary("jnidemo");
    }

    public native void nativeSelfCall();
}
```

JNI part

The JNI code can live in many places in the source tree; here I put it under /frameworks/native/libs:

------> /frameworks/native/libs/jnidemo/ProcessInfo.cpp

```cpp
#define LOG_TAG "jnidemo"
#include <jni.h>
#include <utils/Log.h>
#include <binder/IPCThreadState.h>

using namespace android;

static void JNI_nativeSelfCall(JNIEnv* env, jobject thiz) // implementation of the JNI method
{
    ALOGE("Keiven-Chen JNI_nativeSelfCall 111"); // log
    IPCThreadState::self()->selfCall(); // the key call: the method I added to IPCThreadState.cpp
    ALOGE("Keiven-Chen JNI_nativeSelfCall 222");
}

static JNINativeMethod gMethods[] = {
    {"nativeSelfCall", "()V", (void*)JNI_nativeSelfCall}, // bind the JNI method
};

JNIEXPORT jint JNICALL JNI_OnLoad(JavaVM* jvm, void* reserved) {
    JNIEnv* env = NULL;
    jint result = -1;
    if (jvm->GetEnv((void**) &env, JNI_VERSION_1_4) != JNI_OK) {
        return -1;
    }
    // Bind to the Java class. nativeSelfCall must be declared in exactly this class,
    // which is why my ProcessInfo has to use the package name above.
    jclass clazz = env->FindClass("com/example/bindservice/ProcessInfo");
    if (clazz) {
        if (env->RegisterNatives(clazz, gMethods, sizeof(gMethods) / sizeof(gMethods[0])) < 0) // register the natives
            ALOGE("Keiven-Chen RegisterNatives natives NOT ok");
        else
            ALOGE("Keiven-Chen RegisterNatives natives ok");
    } else {
        ALOGE("Keiven-Chen could not find class");
    }
    result = JNI_VERSION_1_4;
    return result;
}
```

Build script for the JNI library (Android.mk):

```makefile
LOCAL_PATH := $(call my-dir)
include $(CLEAR_VARS)
LOCAL_LDLIBS := -lm -llog
# produces libjnidemo.so
LOCAL_MODULE := libjnidemo
LOCAL_SHARED_LIBRARIES := liblog libcutils libutils libbinder
LOCAL_SRC_FILES := ProcessInfo.cpp
include $(BUILD_SHARED_LIBRARY)
```

As you can see, the JNI part is simple: it just **calls IPCThreadState::self()->selfCall();**, and selfCall() has to be implemented in IPCThreadState.cpp. For JNI background, see my earlier JNI/NDK article;

IPCThreadState.cpp

------> IPCThreadState.cpp

```cpp
void IPCThreadState::selfCall()
{
    ALOGE("Keiven-Chen_IPCThread IPCThreadState::selfCall() 111 pid = %d, size of bwr is %zu",
          getpid(), sizeof(binder_write_read));
    // Send a custom command to the Binder driver, used to trace the kernel driver (covered later)
    ioctl(mProcess->mDriverFD, 123456, NULL);
    // Record the current process ID; later logs are filtered by this PID.
    // g_nTargetPid is a global the author added to this file (declaration not shown).
    g_nTargetPid = getpid();
    ALOGE("Keiven-Chen_IPCThread IPCThreadState::selfCall() 222");
}
```

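Following the logic in the app's Activity, the JNI call to selfCall() in IPCThreadState.cpp runs first, which records my app's PID: g_nTargetPid = getpid() = 4385. IPCThreadState.cpp then uses this PID to filter out the transact() logs of all other processes. In the complete log screenshot, selfCall is called first and isScreenOn afterwards.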

At this point it should be clear how the app layer reaches IPCThreadState::transact(). Next we continue tracing from IPCThreadState::transact();

The core of IPCThreadState::transact() is the call to waitForResponse():

------> IPCThreadState.cpp

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err = data.errorCheck();
    flags |= TF_ACCEPT_FDS;
    if (getpid() == g_nTargetPid)
        ALOGE("Keiven-Chen_IPCThread IPCThreadState::transact()");
    // ......
    if (reply) {
        err = waitForResponse(reply); // the key call
    } else {
        Parcel fakeReply;
        err = waitForResponse(&fakeReply);
    }
    // ......
}

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    int32_t cmd;
    int32_t err;

    //if ((g_nTargetPid > 0) && (getpid() == g_nTargetPid)) // log added for filtering
    //    ALOGE("11111 IPCThreadState::waitForResponse() mOut.data %d", *(int*)(mOut.data()));

    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break; // the key call: talk to the driver
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;

        cmd = mIn.readInt32(); // read the command returned by the Binder driver from mIn

        switch (cmd) { // act on the different commands returned by the driver
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;

        case BR_DEAD_REPLY:
            err = DEAD_OBJECT;
            goto finish;

        case BR_FAILED_REPLY:
            err = FAILED_TRANSACTION;
            goto finish;

        case BR_ACQUIRE_RESULT:
            {
                ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");
                const int32_t result = mIn.readInt32();
                if (!acquireResult) continue;
                *acquireResult = result ? NO_ERROR : INVALID_OPERATION;
            }
            goto finish;

        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                if (err != NO_ERROR) goto finish;

                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        reply->ipcSetDataReference(
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t),
                            freeBuffer, this);
                    } else {
                        err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    freeBuffer(NULL,
                        reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                        tr.data_size,
                        reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                        tr.offsets_size/sizeof(binder_size_t), this);
                    continue;
                }
            }
            goto finish;

        default:
            err = executeCommand(cmd); // the key call: handle other commands returned by the driver
            if (err != NO_ERROR) goto finish;
            break;
        }
    }

finish:
    if (err != NO_ERROR) {
        if (acquireResult) *acquireResult = err;
        if (reply) reply->setError(err);
        mLastError = err;
    }

    return err;
}
// ......
```

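The heart of waitForResponse() is the call to talkWithDriver(), which is what actually talks to the driver. waitForResponse() also has to handle what the driver returns, performing further work according to the cmd that the Binder driver sends back;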
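The control flow of waitForResponse() can be modeled without the driver. The sketch below is a simplification: `BR` is a made-up enum rather than the kernel's BR_* values, `Result` stands in for NO_ERROR / DEAD_OBJECT / FAILED_TRANSACTION, and a std::deque plays the role of mIn after talkWithDriver() has filled it.

```cpp
#include <deque>

// Made-up subset of driver return commands; the real BR_* values come
// from the kernel's binder UAPI header.
enum class BR { TRANSACTION_COMPLETE, REPLY, DEAD_REPLY, FAILED_REPLY, NOOP };

// Simplified results standing in for NO_ERROR / DEAD_OBJECT / FAILED_TRANSACTION.
enum Result { OK = 0, DEAD = -1, FAILED = -2 };

// `incoming` plays the role of mIn; `wantReply` is false for a one-way call
// (waitForResponse(NULL, NULL)). `otherCommands` counts what the real code
// would hand to executeCommand().
Result waitForResponseSketch(std::deque<BR>& incoming, bool wantReply, int& otherCommands) {
    while (!incoming.empty()) {
        BR cmd = incoming.front();
        incoming.pop_front();
        switch (cmd) {
        case BR::TRANSACTION_COMPLETE:
            // The driver accepted our BC_TRANSACTION. A one-way call is finished;
            // a two-way call keeps looping until BR_REPLY arrives.
            if (!wantReply) return OK;
            break;
        case BR::REPLY:
            return OK;       // the real code attaches the reply data to *reply here
        case BR::DEAD_REPLY:
            return DEAD;
        case BR::FAILED_REPLY:
            return FAILED;
        default:
            ++otherCommands; // real code: err = executeCommand(cmd)
            break;
        }
    }
    // The real loop would call talkWithDriver() again and block for more
    // commands; the sketch just reports that nothing conclusive arrived.
    return FAILED;
}
```

For a normal two-way call the client therefore sees at least two commands: BR_TRANSACTION_COMPLETE (the request was accepted) and, later, BR_REPLY (the server answered).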

------> IPCThreadState.cpp

```cpp
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    if (mProcess->mDriverFD <= 0) {
        return -EBADF;
    }

    binder_write_read bwr;

    // Is the read buffer empty?
    const bool needRead = mIn.dataPosition() >= mIn.dataSize();

    // We don't want to write anything if we are still reading
    // from data left in the input buffer and the caller
    // has requested to read the next data.
    const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

    bwr.write_size = outAvail;
    // mOut was filled earlier by writeTransactionData();
    // bwr is the structure used to exchange data with the Binder driver
    bwr.write_buffer = (uintptr_t)mOut.data();

    // This is what we'll read.
    if (doReceive && needRead) {
        bwr.read_size = mIn.dataCapacity();
        bwr.read_buffer = (uintptr_t)mIn.data();
    } else {
        bwr.read_size = 0;
        bwr.read_buffer = 0;
    }

    // Return immediately if there is nothing to do.
    if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR; // nothing to read or write

    bwr.write_consumed = 0;
    bwr.read_consumed = 0;
    status_t err;
    do {
        IF_LOG_COMMANDS() {
            alog << "About to read/write, write size = " << mOut.dataSize() << endl;
        }
#if defined(HAVE_ANDROID_OS)
        //if (getpid() == g_nTargetPid)
        //    ALOGE("Keiven-Chen IPCThreadState::talkWithDriver() now into ioctl");
        // read and write through ioctl: this is where we actually talk to the Binder driver
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
            err = NO_ERROR;
        else
            err = -errno;
        //if (getpid() == g_nTargetPid)
        //    ALOGE("Keiven-Chenkai IPCThreadState::talkWithDriver() now out of ioctl");
#else
        err = INVALID_OPERATION;
#endif
        if (mProcess->mDriverFD <= 0) {
            err = -EBADF;
        }
    } while (err == -EINTR); // retry if the call was interrupted by a signal

    if (err >= NO_ERROR) {
        if (bwr.write_consumed > 0) {
            if (bwr.write_consumed < mOut.dataSize())
                mOut.remove(0, bwr.write_consumed);
            else
                mOut.setDataSize(0);
        }
        if (bwr.read_consumed > 0) {
            mIn.setDataSize(bwr.read_consumed);
            mIn.setDataPosition(0);
        }
        return NO_ERROR;
    }

    return err;
}
```

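binder_write_read is the structure used to exchange data with the Binder device. Calling ioctl on mDriverFD is the step where data is actually read from and written to the Binder driver.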
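After ioctl() returns, talkWithDriver() trims mOut by write_consumed and exposes read_consumed bytes of mIn. A C++ sketch of that bookkeeping, with std::vector standing in for Parcel; `BufferState`, `afterIoctl` and `bytesToWrite` are hypothetical names, and unlike the real driver (which writes straight into mIn's buffer) the sketch copies the returned bytes.

```cpp
#include <cstddef>
#include <vector>

// Vectors stand in for the mOut / mIn Parcels.
struct BufferState {
    std::vector<unsigned char> out;  // commands waiting to be written (mOut)
    std::vector<unsigned char> in;   // data returned by the driver (mIn)
    std::size_t inPos = 0;           // mIn.dataPosition()
};

// Mirrors needRead / outAvail at the top of talkWithDriver(): don't write
// while unread input is pending and the caller wants to receive.
std::size_t bytesToWrite(const BufferState& s, bool doReceive) {
    const bool needRead = s.inPos >= s.in.size();
    return (!doReceive || needRead) ? s.out.size() : 0;
}

// Mirrors the bookkeeping after a successful ioctl(BINDER_WRITE_READ).
// Precondition: driverData holds at least readConsumed bytes.
void afterIoctl(BufferState& s, std::size_t writeConsumed,
                const std::vector<unsigned char>& driverData, std::size_t readConsumed) {
    if (writeConsumed > 0) {
        if (writeConsumed < s.out.size())
            s.out.erase(s.out.begin(), s.out.begin() + static_cast<std::ptrdiff_t>(writeConsumed)); // mOut.remove(0, n)
        else
            s.out.clear(); // mOut.setDataSize(0)
    }
    if (readConsumed > 0) {
        s.in.assign(driverData.begin(),
                    driverData.begin() + static_cast<std::ptrdiff_t>(readConsumed)); // mIn.setDataSize(n)
        s.inPos = 0; // mIn.setDataPosition(0)
    }
}
```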

So the core of talkWithDriver() is the ioctl call on mDriverFD. How is this ioctl implemented? ioctl is in fact a system call; below I record the call path of the ioctl syscall;
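To see that the libc wrapper and the raw system call reach the same kernel handler, the Linux-only sketch below issues the same request both ways. It uses FIONREAD on a pipe purely because that needs no special device; Binder would instead use BINDER_WRITE_READ on /dev/binder, which this example does not touch. `queryPipeBacklog` is a hypothetical helper name.

```cpp
#include <sys/ioctl.h>
#include <sys/syscall.h>
#include <unistd.h>

// Linux-only. Writes 5 bytes into a fresh pipe, then asks "how many bytes are
// waiting?" twice: once through the libc ioctl() wrapper and once through a
// raw syscall(SYS_ioctl, ...). Both end up in the kernel's sys_ioctl().
// Returns true if every call succeeded.
bool queryPipeBacklog(int& viaLibc, int& viaSyscall) {
    int fds[2];
    if (pipe(fds) != 0) return false;
    const char msg[] = "hello";
    const bool ok = write(fds[1], msg, 5) == 5
                 && ioctl(fds[0], FIONREAD, &viaLibc) == 0
                 && syscall(SYS_ioctl, fds[0], FIONREAD, &viaSyscall) == 0;
    close(fds[0]);
    close(fds[1]);
    return ok;
}
```

FIONREAD only reports the backlog without consuming it, so both paths see the same 5 bytes.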

IPCThreadState.cpp

ioctl (#include <sys/ioctl.h>)

=====>

ioctl.h (bionic/libc/include/sys/ioctl.h)

```c
__BEGIN_DECLS
extern int ioctl(int, int, ...);
__END_DECLS
```

======>

bionic/ioctl.cpp (/bionic/libc/bionic/ioctl.c)

```c
#include <stdarg.h>

extern int __ioctl(int, int, void *);

int ioctl(int fd, int request, ...)
{
    va_list ap;
    void * arg;
    va_start(ap, request);
    arg = va_arg(ap, void *);
    va_end(ap);
    return __ioctl(fd, request, arg);
}
```

======>

/bionic/libc/arch-arm/syscalls/__ioctl.S

```asm
#include <private/bionic_asm.h>

ENTRY(__ioctl)
    mov     ip, r7
    ldr     r7, =__NR_ioctl   /* __NR_ioctl is the system call number of ioctl */
    swi     #0                /* software interrupt: trap into the kernel */
    mov     r7, ip
    cmn     r0, #(MAX_ERRNO + 1)
    bxls    lr
    neg     r0, r0
    b       __set_errno_internal
END(__ioctl)
```

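__NR_ioctl is a macro defined in /kernel/include/uapi/asm-generic/unistd.h:

------> unistd.h

```c
/* fs/ioctl.c */   /* i.e. sys_ioctl is implemented in this file */
#define __NR_ioctl 29
__SC_COMP(__NR_ioctl, sys_ioctl, compat_sys_ioctl)
```

------> kernel/arch/arm/kernel/calls.S

```asm
CALL(sys_ni_syscall)   /* was sys_lock */
CALL(sys_ioctl)        /* dispatches to sys_ioctl */
```

Note that the value 29 comes from the generic syscall table used by arm64 and other newer architectures. 32-bit ARM, which the __ioctl.S stub above targets, uses its own numbering: there __NR_ioctl is 54, which is why sys_ioctl sits in calls.S right after entry 53, the retired sys_lock slot.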

------> /kernel/include/linux/syscalls.h

```c
#define SYSCALL_DEFINE0(sname)                                  \
        SYSCALL_METADATA(_##sname, 0);                          \
        asmlinkage long sys_##sname(void)

#define SYSCALL_DEFINE1(name, ...) SYSCALL_DEFINEx(1, _##name, __VA_ARGS__)
#define SYSCALL_DEFINE2(name, ...) SYSCALL_DEFINEx(2, _##name, __VA_ARGS__)
#define SYSCALL_DEFINE3(name, ...) SYSCALL_DEFINEx(3, _##name, __VA_ARGS__)
#define SYSCALL_DEFINE4(name, ...) SYSCALL_DEFINEx(4, _##name, __VA_ARGS__)
#define SYSCALL_DEFINE5(name, ...) SYSCALL_DEFINEx(5, _##name, __VA_ARGS__)
#define SYSCALL_DEFINE6(name, ...) SYSCALL_DEFINEx(6, _##name, __VA_ARGS__)

#define SYSCALL_DEFINEx(x, sname, ...)                          \
        SYSCALL_METADATA(sname, x, __VA_ARGS__)                 \
        __SYSCALL_DEFINEx(x, sname, __VA_ARGS__)

#define __PROTECT(...) asmlinkage_protect(__VA_ARGS__)

/* our sys_ioctl is expanded from the first line of this macro */
#define __SYSCALL_DEFINEx(x, name, ...)                                 \
        asmlinkage long sys##name(__MAP(x,__SC_DECL,__VA_ARGS__));      \
        static inline long SYSC##name(__MAP(x,__SC_DECL,__VA_ARGS__));  \
        asmlinkage long SyS##name(__MAP(x,__SC_LONG,__VA_ARGS__))       \
        {                                                               \
                long ret = SYSC##name(__MAP(x,__SC_CAST,__VA_ARGS__));  \
                __MAP(x,__SC_TEST,__VA_ARGS__);                         \
                __PROTECT(x, ret,__MAP(x,__SC_ARGS,__VA_ARGS__));       \
                return ret;                                             \
        }                                                               \
        SYSCALL_ALIAS(sys##name, SyS##name);                            \
        static inline long SYSC##name(__MAP(x,__SC_DECL,__VA_ARGS__))

asmlinkage long sys_ioctl(unsigned int fd, unsigned int cmd,
                          unsigned long arg);
```

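asmlinkage is a calling-convention marker. On x86-32 it expands to a GCC attribute (regparm(0)) that makes the function take all of its arguments from the stack rather than from registers; on ARM it expands to nothing, since the ARM calling convention already passes the first arguments in registers.

From the code above we can see that our ioctl is defined with SYSCALL_DEFINE3, in kernel/fs/ioctl.c;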

------> ioctl.c

```c
SYSCALL_DEFINE3(ioctl, unsigned int, fd, unsigned int, cmd, unsigned long, arg)
{
    int error;
    struct fd f = fdget(fd); /* the mDriverFD passed from IPCThreadState.cpp, i.e. the /dev/binder device */

    if (!f.file)
        return -EBADF;
    error = security_file_ioctl(f.file, cmd, arg);
    if (!error)
        error = do_vfs_ioctl(f.file, fd, cmd, arg);
    fdput(f);
    return error;
}
```

Now we know how our ioctl call proceeds. The complete ioctl call chain is:

ioctl() → sys_ioctl() → do_vfs_ioctl() → vfs_ioctl() → f_op->unlocked_ioctl() → binder_ioctl()

------> /kernel/drivers/staging/android/binder.c

```c
static const struct file_operations binder_fops = {
    .owner = THIS_MODULE,
    .poll = binder_poll,
    .unlocked_ioctl = binder_ioctl,
    .compat_ioctl = binder_ioctl,
    .mmap = binder_mmap,
    .open = binder_open,
    .flush = binder_flush,
    .release = binder_release,
};

static struct miscdevice binder_miscdev = {
    .minor = MISC_DYNAMIC_MINOR,
    .name = "binder",   /* registers binder as a misc device: /dev/binder */
    .fops = &binder_fops
};
```

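That clarifies how the ioctl in IPCThreadState.cpp ends up in binder_ioctl() in the driver's binder.c;

The Binder driver has been explained by countless experts, such as Lao Luo (老罗) and Yuan (袁神); for completeness I will only sketch how the kernel driver processes a call. As mentioned above, talkWithDriver() passes the BINDER_WRITE_READ cmd to the kernel, and the driver acts on that cmd. At its core, depending on whether there is data to write and/or read, it calls binder_thread_write() and/or binder_thread_read(). binder_thread_write() calls binder_transaction() to handle the BC_TRANSACTION and BC_REPLY commands. Based on the result, the driver queues BR_xxx return commands: the client's waitForResponse() receives BR_TRANSACTION_COMPLETE and later BR_REPLY, while a server thread (typically waiting in joinThreadPool() → getAndExecuteCommand()) receives BR_TRANSACTION and handles it in executeCommand();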
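The BINDER_WRITE_READ branch always processes the write buffer before the read buffer, and skips the read if the write fails. A user-space C++ model of just that ordering; `BwrModel` mirrors the field names of struct binder_write_read, while `DriverModel` and its fake threadWrite/threadRead handlers are hypothetical stand-ins for binder_thread_write()/binder_thread_read().

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Field names follow struct binder_write_read.
struct BwrModel {
    uint64_t write_size = 0, write_consumed = 0;
    uint64_t read_size = 0, read_consumed = 0;
};

// Hypothetical stand-in for the driver: the handlers just record that they ran.
struct DriverModel {
    std::vector<std::string> trace;
    int writeResult = 0;   // what the fake threadWrite() returns

    int threadWrite(BwrModel& b) {
        trace.push_back("write");
        b.write_consumed = b.write_size;   // pretend every command was consumed
        return writeResult;
    }
    int threadRead(BwrModel& b) {
        trace.push_back("read");
        b.read_consumed = b.read_size;     // pretend the read buffer was filled
        return 0;
    }

    // Same ordering as the BINDER_WRITE_READ case of binder_ioctl().
    int writeRead(BwrModel& b) {
        if (b.write_size > 0) {
            int ret = threadWrite(b);
            if (ret < 0) {
                b.read_consumed = 0;       // nothing was read; this is copied back to user space
                return ret;
            }
        }
        if (b.read_size > 0) {
            int ret = threadRead(b);
            if (ret < 0) return ret;
        }
        return 0;
    }
};
```

This ordering is what lets a client send BC_TRANSACTION and start waiting for the answer with a single ioctl.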

------>/kernel/drivers/staging/android/binder.c binder_ioctl

```c
/* excerpt: proc, thread, ubuf and size are set up earlier in binder_ioctl() */
switch (cmd) {
case BINDER_WRITE_READ: {
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto err;
    }
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
        ret = -EFAULT;
        goto err;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
                 "%d:%d write %lld at %016llx, read %lld at %016llx\n",
                 proc->pid, thread->pid,
                 (u64)bwr.write_size, (u64)bwr.write_buffer,
                 (u64)bwr.read_size, (u64)bwr.read_buffer);

    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread, bwr.write_buffer, bwr.write_size, &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto err;
        }
    }
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        if (!list_empty(&proc->todo))
            wake_up_interruptible(&proc->wait);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto err;
        }
    }
    /* ...... */
```

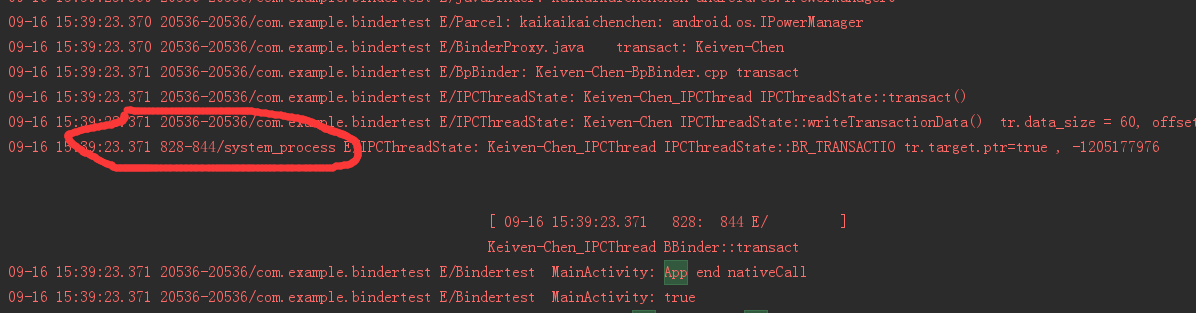

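The client packs the handle, cmd, data, code and so on into a binder_transaction_data, wraps that in a binder_write_read structure, and calls ioctl to interact with the driver; the driver calls binder_thread_write() and binder_thread_read() to process the transaction. In short: IPCThreadState.cpp: BC_TRANSACTION → binder.c: binder_ioctl → IPCThreadState.cpp: BR_TRANSACTION

Figure borrowed from Yuan (袁神):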

------> IPCThreadState.cpp waitForResponse ---> executeCommand

```cpp
switch (cmd) {
// ......
case BR_TRANSACTION:
    // ......
    if (tr.target.ptr) {
        // b is the BBinder object: the cookie field carries the local Binder object
        sp<BBinder> b((BBinder*)tr.cookie); // the key step
        ALOGE("Keiven-Chen_IPCThread IPCThreadState::BR_TRANSACTIO tr.target.ptr=true , %p \n",
              (void*)(uintptr_t)tr.target.ptr);
        error = b->transact(tr.code, buffer, &reply, tr.flags); // call the BBinder's transact()
    } else {
        ALOGE("Keiven-Chen_IPCThread IPCThreadState::BR_TRANSACTIO tr.target.ptr=false \n");
        error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
    }
    // ......
}
```

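BR_TRANSACTION is handled on the server side, so at this point we have already switched to the server process; everything that follows runs in the server process;

The server process calls BBinder::transact(), which in turn calls onTransact(). For a service implemented in Java, the BBinder subclass is JavaBBinder, so the call ends up in JavaBBinder::onTransact(). JavaBBinder is defined in android_util_Binder.cpp;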
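BBinder::transact() is a classic template method: the base class handles generic codes such as PING_TRANSACTION itself and defers everything else to a virtual onTransact(). A self-contained C++ sketch of the pattern; `BBinderSketch`, `ParcelSketch` and `AddOneService` are hypothetical simplifications, not libbinder classes.

```cpp
#include <cstdint>
#include <vector>

// Minimal stand-ins; the real classes are android::Parcel and android::BBinder.
using ParcelSketch = std::vector<int32_t>;
constexpr uint32_t kPingTransaction = 0x5f504e47; // '_PNG', same packing as IBinder::PING_TRANSACTION

class BBinderSketch {
public:
    virtual ~BBinderSketch() = default;

    // Entry point, like BBinder::transact(): handles the generic code itself
    // and forwards everything else to the subclass via onTransact().
    int transact(uint32_t code, const ParcelSketch& data, ParcelSketch* reply) {
        int err = 0;
        if (code == kPingTransaction) {
            if (reply) reply->push_back(0);   // pingBinder() reports NO_ERROR
        } else {
            err = onTransact(code, data, reply);
        }
        return err;
    }

protected:
    // Subclasses (JavaBBinder, BnXXX, ...) override this.
    virtual int onTransact(uint32_t code, const ParcelSketch& data, ParcelSketch* reply) {
        (void)code; (void)data; (void)reply;
        return -1; // stands in for UNKNOWN_TRANSACTION
    }
};

// Hypothetical service: code 1 replies with data[0] + 1.
class AddOneService : public BBinderSketch {
protected:
    int onTransact(uint32_t code, const ParcelSketch& data, ParcelSketch* reply) override {
        if (code != 1 || data.empty() || reply == nullptr)
            return BBinderSketch::onTransact(code, data, reply);
        reply->push_back(data[0] + 1);
        return 0;
    }
};
```

Unknown codes fall back to the base implementation, which is also how JavaBBinder routes SYSPROPS_TRANSACTION through BBinder::onTransact().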

------> Binder.cpp class BBinder

```cpp
status_t BBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    data.setDataPosition(0);
    ALOGE("Keiven-Chen_IPCThread BBinder::transact \n");

    status_t err = NO_ERROR;
    switch (code) {
        case PING_TRANSACTION:
            reply->writeInt32(pingBinder());
            break;
        default:
            // onTransact is overridden by subclasses, so this calls the subclass's onTransact
            err = onTransact(code, data, reply, flags);
            break;
    }

    if (reply != NULL) {
        reply->setDataPosition(0);
    }

    return err;
}
```

------> android_util_Binder.cpp

```cpp
class JavaBBinder : public BBinder
{
    // ......
    virtual status_t onTransact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0)
    {
        JNIEnv* env = javavm_to_jnienv(mVM);
        IPCThreadState* thread_state = IPCThreadState::self();
        const int32_t strict_policy_before = thread_state->getStrictModePolicy();

        // The key step: call Binder.java's execTransact(), which in turn
        // calls onTransact() of the Java-layer Stub.
        jboolean res = env->CallBooleanMethod(mObject, gBinderOffsets.mExecTransact,
            code, reinterpret_cast<jlong>(&data), reinterpret_cast<jlong>(reply), flags);

        // ...... (exception handling omitted)

        if (thread_state->getStrictModePolicy() != strict_policy_before) {
            set_dalvik_blockguard_policy(env, strict_policy_before);
        }

        // Need to always call through the native implementation of
        // SYSPROPS_TRANSACTION.
        if (code == SYSPROPS_TRANSACTION) {
            BBinder::onTransact(code, data, reply, flags);
        }

        return res != JNI_FALSE ? NO_ERROR : UNKNOWN_TRANSACTION;
    }
    // ......
};
```

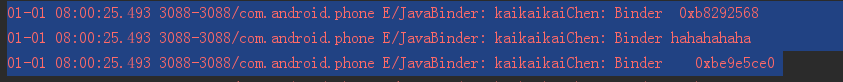

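Reading this far, I had a question: why does the call necessarily go through JavaBBinder::onTransact()? To answer that, we have to start from service registration;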

------> ServiceManagerNative.java class ServiceManagerProxy

```java
public void addService(String name, IBinder service, boolean allowIsolated)
        throws RemoteException {
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    // The descriptor written here is fixed for each service.
    // This is a Parcel write; in the Parcel JNI method android_os_Parcel_writeInterfaceToken
    // I call my testString() method in android_util_Binder.cpp.
    data.writeInterfaceToken(IServiceManager.descriptor);
    data.writeString(name);
    data.writeStrongBinder(service); // the service being registered is written here
    data.writeInt(allowIsolated ? 1 : 0);
    mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);
    reply.recycle();
    data.recycle();
}
```

------> android_os_Parcel.cpp

```cpp
static void android_os_Parcel_writeInterfaceToken(JNIEnv* env, jclass clazz, jlong nativePtr,
                                                  jstring name)
{
    Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);
    if (parcel != NULL) {
        // In the current implementation, the token is just the serialized interface name that
        // the caller expects to be invoking
        const jchar* str = env->GetStringCritical(name, 0);
        if (str != NULL) {
            // Call my own helper in android_util_Binder.cpp (it must be declared before use;
            // declaration not shown), passing name along so that the internals of
            // ibinderForJavaObject can be traced per interface.
            testString(String16(str, env->GetStringLength(name)));
            parcel->writeInterfaceToken(String16(str, env->GetStringLength(name)));
            env->ReleaseStringCritical(name, str);
        }
    }
}

static void android_os_Parcel_writeStrongBinder(JNIEnv* env, jclass clazz, jlong nativePtr, jobject object)
{
    Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);
    if (parcel != NULL) {
        // ibinderForJavaObject is implemented in android_util_Binder.cpp
        const status_t err = parcel->writeStrongBinder(ibinderForJavaObject(env, object));
        if (err != NO_ERROR) {
            signalExceptionForError(env, clazz, err);
        }
    }
}
```

------> android_util_Binder.cpp

```cpp
// Added by me: called from writeInterfaceToken when a service registers,
// so that the logs below can be filtered by interface name.
// A String8 copy is kept because String8(str).string() on a temporary would
// leave a dangling pointer; an empty String8 also makes strcmp() safe before
// the first call.
static String8 gNamePower;

void testString(const String16& str) {
    gNamePower = String8(str);
    ALOGE("kaikaikaichenchen %s \n", gNamePower.string());
}

sp<IBinder> ibinderForJavaObject(JNIEnv* env, jobject obj)
{
    if (obj == NULL) return NULL;

    if (env->IsInstanceOf(obj, gBinderOffsets.mClass)) {
        JavaBBinderHolder* jbh = (JavaBBinderHolder*)
            env->GetLongField(obj, gBinderOffsets.mObject);
        if (strcmp(gNamePower.string(), "android.os.IPowerManager") == 0) {
            ALOGE("kaikaikaiChen: Binder %p", jbh);
            ALOGE("kaikaikaiChen: Binder hahahahaha"); // marker log for tracing; see the screenshot below
            ALOGE("kaikaikaiChen: Binder %p ", obj);
        }
        // Calls JavaBBinderHolder::get(), i.e. creates the JavaBBinder.
        // The logs confirm that registering IPowerManager takes this branch.
        return jbh != NULL ? jbh->get(env, obj) : NULL;
    }

    if (env->IsInstanceOf(obj, gBinderProxyOffsets.mClass)) {
        if (strcmp(gNamePower.string(), "android.os.IPowerManager") == 0)
            ALOGE("kaikaikaiChen: BinderProxy %p ", obj); // the log tells us what is actually returned here
        return (IBinder*)
            env->GetLongField(obj, gBinderProxyOffsets.mObject);
    }

    ALOGW("ibinderForJavaObject: %p is not a Binder object", obj);
    return NULL;
}
```

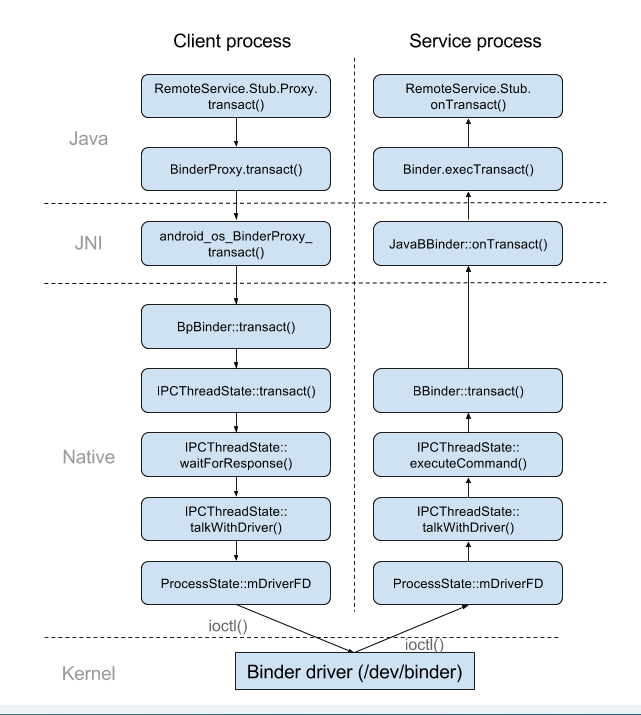

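To sum up: the call goes to JavaBBinder::onTransact(), which calls Binder.java's execTransact(); execTransact() calls onTransact(), which is overridden by subclasses, so the subclass's onTransact() runs. IPowerManager.Stub extends Binder and implements onTransact(), so the call finally lands in IPowerManager.Stub.onTransact(). The complete Binder call flow is shown in the figure below, borrowed from Yuan (袁神) and other experts online:

The two figures above capture the main line of Binder;

After all this groundwork, our PowerManager.isScreenOn() call reaches IPowerManager.Stub.onTransact(). onTransact() dispatches on the code; since isScreenOn() simply delegates to isInteractive(), the code here is TRANSACTION_isInteractive: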

------> IPowerManager.java class Stub

```java
case TRANSACTION_isInteractive: {
    data.enforceInterface(DESCRIPTOR);
    boolean _result = this.isInteractive();
    reply.writeNoException();
    reply.writeInt(((_result) ? (1) : (0)));
    return true;
}
// ......

// Declared in the IPowerManager interface that Stub implements:
public boolean isInteractive() throws android.os.RemoteException;
```

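The implementation of isInteractive() lives in PowerManagerService.java: in older releases PowerManagerService extends IPowerManager.Stub directly, while from Android 5.0 on it is PowerManagerService's inner class BinderService that extends IPowerManager.Stub. Either way, the call ends up in PowerManagerService's isInteractive(). That completes the whole path from the client's transact() → Binder driver → the server's onTransact(), and with it one complete Binder call;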
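Putting the two halves of the AIDL-generated code side by side makes the isInteractive() round trip concrete. Below is a C++ model in which the Binder driver is replaced by a direct call; `ToyParcel`, `StubSketch`, `proxyIsInteractive` and `FakePowerService` are hypothetical, and the numeric value of TRANSACTION_isInteractive is an assumption (AIDL numbers methods upward from FIRST_CALL_TRANSACTION).

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

// Toy Parcel: an interface token plus a flat list of ints.
struct ToyParcel {
    std::string token;
    std::vector<int32_t> ints;
    std::size_t pos = 0;
    int32_t readInt() { return ints.at(pos++); }   // throws if the reply is too short
};

constexpr const char* kDescriptor = "android.os.IPowerManager";
constexpr uint32_t kFirstCallTransaction = 1;                              // IBinder.FIRST_CALL_TRANSACTION
constexpr uint32_t TRANSACTION_isInteractive = kFirstCallTransaction + 0; // offset assumed

class StubSketch {
public:
    virtual ~StubSketch() = default;
    virtual bool isInteractive() = 0;     // implemented by the service

    // Mirrors the generated Stub.onTransact() case for isInteractive.
    bool onTransact(uint32_t code, const ToyParcel& data, ToyParcel& reply) {
        switch (code) {
        case TRANSACTION_isInteractive: {
            if (data.token != kDescriptor)                  // data.enforceInterface(DESCRIPTOR)
                throw std::runtime_error("interface token mismatch");
            const bool result = isInteractive();
            reply.ints.push_back(0);                        // reply.writeNoException()
            reply.ints.push_back(result ? 1 : 0);           // reply.writeInt(...)
            return true;
        }
        default:
            return false;                                   // unknown code
        }
    }
};

// Mirrors the generated Proxy.isInteractive(), with the driver replaced by a direct call.
bool proxyIsInteractive(StubSketch& remote) {
    ToyParcel data, reply;
    data.token = kDescriptor;                               // data.writeInterfaceToken(DESCRIPTOR)
    if (!remote.onTransact(TRANSACTION_isInteractive, data, reply))
        throw std::runtime_error("unknown transaction");
    if (reply.readInt() != 0)                               // reply.readException()
        throw std::runtime_error("remote threw an exception");
    return reply.readInt() != 0;
}

// Hypothetical service standing in for PowerManagerService's binder object.
class FakePowerService : public StubSketch {
public:
    bool screenOn = true;
    bool isInteractive() override { return screenOn; }
};
```

The order of writes on the Stub side (exception code first, then the result) must match the order of reads on the Proxy side; that shared convention is what the generated code encodes for both ends.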

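Many smart-hardware products need native services; I recommend Chloe_Zhang's article on Native Service.

The steps to implement a native service are:

1. Implement an interface file, IXXXService, that extends IInterface.

2. Define BnXXX, extending BnInterface<IXXXService>. Implement an XXXService that extends BnXXX and implements onTransact().

3. Define BpXXX, extending BpInterface<IXXXService>.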
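The three steps can be mocked without libbinder to show how the pieces relate. This is only a sketch: `IBinderLike`, `IAdderService`, `BnAdderService`, `BpAdderService` and `AdderService` are hypothetical, and in real code the Bn side overrides onTransact(), which BBinder::transact() calls, rather than transact() itself.

```cpp
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

using Data = std::vector<int32_t>;

// Stands in for IBinder: something a transaction can be sent to.
struct IBinderLike {
    virtual ~IBinderLike() = default;
    virtual int transact(uint32_t code, const Data& in, Data* out) = 0;
};

// Step 1: the interface (IXXXService : IInterface).
struct IAdderService {
    virtual ~IAdderService() = default;
    virtual int32_t add(int32_t a, int32_t b) = 0;
    enum : uint32_t { ADD = 1 };   // transaction code shared by both sides
};

// Step 2a: BnXXX unpacks a request and calls the real method.
struct BnAdderService : IAdderService, IBinderLike {
    int transact(uint32_t code, const Data& in, Data* out) override {
        if (code != ADD || in.size() < 2 || out == nullptr) return -1;
        out->push_back(add(in[0], in[1]));
        return 0;
    }
};

// Step 2b: the concrete service (XXXService : BnXXX).
struct AdderService : BnAdderService {
    int32_t add(int32_t a, int32_t b) override { return a + b; }
};

// Step 3: BpXXX packs the arguments and sends them through the remote binder.
struct BpAdderService : IAdderService {
    explicit BpAdderService(std::shared_ptr<IBinderLike> remote) : mRemote(std::move(remote)) {}
    int32_t add(int32_t a, int32_t b) override {
        Data reply;
        if (mRemote->transact(ADD, Data{a, b}, &reply) != 0 || reply.empty()) return 0;
        return reply[0];
    }
private:
    std::shared_ptr<IBinderLike> mRemote;
};
```

Client code only ever sees IAdderService; whether it holds the local AdderService or a BpAdderService wrapping a remote binder is invisible to the caller, which is the point of the Bn/Bp split.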