Gradient boosting trains each additional model on the negative gradient of the loss. For squared error, that negative gradient is simply the residual.
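A minimal numerical check of that claim, assuming the squared loss is written as L(y, p) = 0.5 * (y - p)^2. A central finite difference of L with respect to the prediction p, negated, reproduces the residual y - p. The helper `squared_loss` and the random inputs are illustrative only.

```python
import numpy as np

def squared_loss(y, p):
    # L(y, p) = 0.5 * (y - p)^2, evaluated elementwise
    return 0.5 * (y - p) ** 2

rng = np.random.default_rng(0)
y = rng.normal(size=5)   # illustrative targets
p = rng.normal(size=5)   # illustrative current predictions

# Central finite-difference estimate of dL/dp for each sample
eps = 1e-6
grad = (squared_loss(y, p + eps) - squared_loss(y, p - eps)) / (2 * eps)

negative_gradient = -grad
residual = y - p
print(np.allclose(negative_gradient, residual))  # True
```

With the factor 0.5, dL/dp = -(y - p) exactly, so fitting the next tree to the negative gradient is the same as fitting it to the residual.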

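```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.tree import DecisionTreeRegressor
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error

%matplotlib inline
```

```python
# load_boston was removed in scikit-learn 1.2; this reads the same Boston
# housing data from its original source (requires network access).
# Each record spans two text lines, hence the interleaved slicing.
data_url = "http://lib.stat.cmu.edu/datasets/boston"
raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)
X = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])  # 13 features
y = raw_df.values[1::2, 2]  # target: median home value (MEDV)
```

```python
class myGradientBoostingRegression:
    """Gradient boosting for squared-error loss with regression trees."""

    def predict(self, X):
        # The ensemble prediction is the coefficient-weighted sum of all trees.
        p = np.zeros(X.shape[0])
        for dt, c in zip(self.learners, self.coefs):
            p += c * dt.predict(X)
        return p

    def fit(self, X, y, n_estimators, max_depth):
        self.learners = []
        self.coefs = []
        for n in range(n_estimators):
            if n == 0:
                # The first tree is fitted directly to the targets.
                dt = DecisionTreeRegressor(max_depth=max_depth)
                dt.fit(X, y)
                self.learners.append(dt)
                self.coefs.append(1.0)
            else:
                # For squared loss 0.5 * (y - p)^2, the negative gradient
                # with respect to p is the residual y - p.
                p = self.predict(X)
                negative_gradient = y - p
                dt = DecisionTreeRegressor(max_depth=max_depth)
                dt.fit(X, negative_gradient)
                self.learners.append(dt)
                # Line search over c in {0.0, 0.1, ..., 0.9}: keep the step
                # size that minimizes the training MSE of p + c * h(X).
                h = dt.predict(X)
                cs = np.arange(0, 1, .1)
                losses = [mean_squared_error(y, p + c * h) for c in cs]
                c = cs[np.argmin(losses)]
                self.coefs.append(c)
        return self

    def score(self, X, y):
        # Mean squared error (lower is better), unlike sklearn's R^2 score().
        return mean_squared_error(y, self.predict(X))
```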
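The coefficient search in `fit` can be probed in isolation. The sketch below uses synthetic data (an assumption; it does not touch the Boston set), repeats one boosting step with depth-3 trees, and compares the grid `np.arange(0, 1, .1)` against the closed-form minimizer of the training MSE, c* = (r · h) / (h · h), where r is the residual and h the new tree's predictions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=200)

# Stage 1: a depth-3 tree fitted to the targets, as in fit()
p = DecisionTreeRegressor(max_depth=3).fit(X, y).predict(X)

# Stage 2: a depth-3 tree fitted to the residuals (the negative gradient)
r = y - p
h = DecisionTreeRegressor(max_depth=3).fit(X, r).predict(X)

# Same grid as fit(): 0.0, 0.1, ..., 0.9
cs = np.arange(0, 1, .1)
losses = [np.mean((y - (p + c * h)) ** 2) for c in cs]
best_grid_c = cs[np.argmin(losses)]

# Unconstrained minimizer of mean((r - c * h)^2) over all real c
best_c = np.dot(r, h) / np.dot(h, h)
print(best_grid_c, best_c)
```

Because a least-squares regression tree predicts the mean residual in each leaf, r · h equals h · h on the training data, so the training MSE of p + c·h is a quadratic in c minimized at exactly c = 1. The grid stops at 0.9, so on training data it picks 0.9 whenever the new tree is not identically zero. Running the search on held-out data, or using a fixed learning rate as scikit-learn does, are common alternatives.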

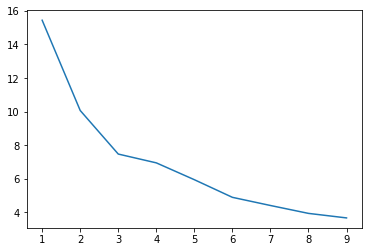

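```python
# Training-set MSE of the hand-written booster for 1 to 9 trees of depth 3.
ns = range(1, 10)
ss = []
for n in ns:
    gb = myGradientBoostingRegression()
    gb.fit(X, y, n, 3)
    ss.append(gb.score(X, y))

plt.plot(ns, ss)
plt.xlabel("n_estimators")
plt.ylabel("training MSE")
```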
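`GradientBoostingRegressor` is imported but never used. A sketch of the library counterpart to the loop above, on synthetic data (an assumption, so it runs without downloads): `staged_predict` yields the ensemble prediction after each stage, so a single fit gives the training MSE for 1 to 9 trees. scikit-learn's version differs from the hand-written one in two ways: it starts from the mean of y rather than from a first tree fitted to y, and it scales each tree by a fixed `learning_rate` instead of a grid line search.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error

# Synthetic data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 3))
y = np.sin(X[:, 0]) + X[:, 1] ** 2 + rng.normal(scale=0.2, size=300)

# 9 stages of depth-3 trees, mirroring range(1, 10) and max_depth=3 above;
# learning_rate=1.0 means no shrinkage of each tree's contribution.
gbr = GradientBoostingRegressor(n_estimators=9, max_depth=3,
                                learning_rate=1.0, random_state=0)
gbr.fit(X, y)

# Training MSE after each boosting stage
sk_mse = [mean_squared_error(y, p) for p in gbr.staged_predict(X)]
print(sk_mse)
```

As with the hand-written booster, this curve measures training error only, so it keeps falling as trees are added and says nothing about overfitting.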