Table of Contents

- Preface

- 1. Analyzing the Web Page

- 2. Writing the Code

- Summary

Preface

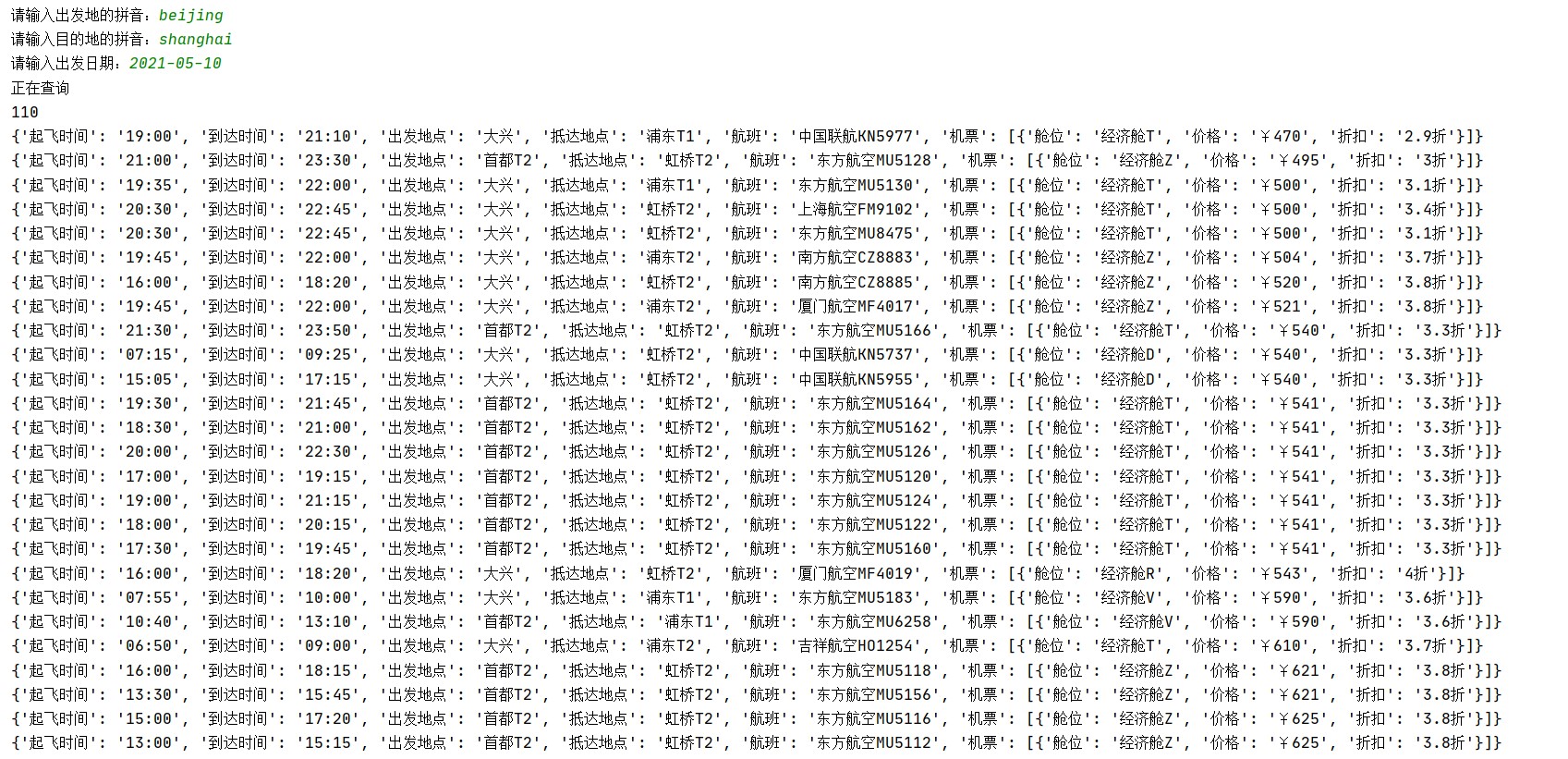

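After so many Selenium articles, let's do something simple and practical. Flying is one of the more convenient ways to travel, and the fare is usually not much different from a high-speed rail ticket; sometimes it is even cheaper. This time we will fetch flight data from three inputs: the departure city, the destination, and the travel date. Let's take a look at the result.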

There are 110 flights from Beijing to Shanghai on May 10, 2021. That's quite a lot.


Writing the Code

1. Analyzing the Web Page

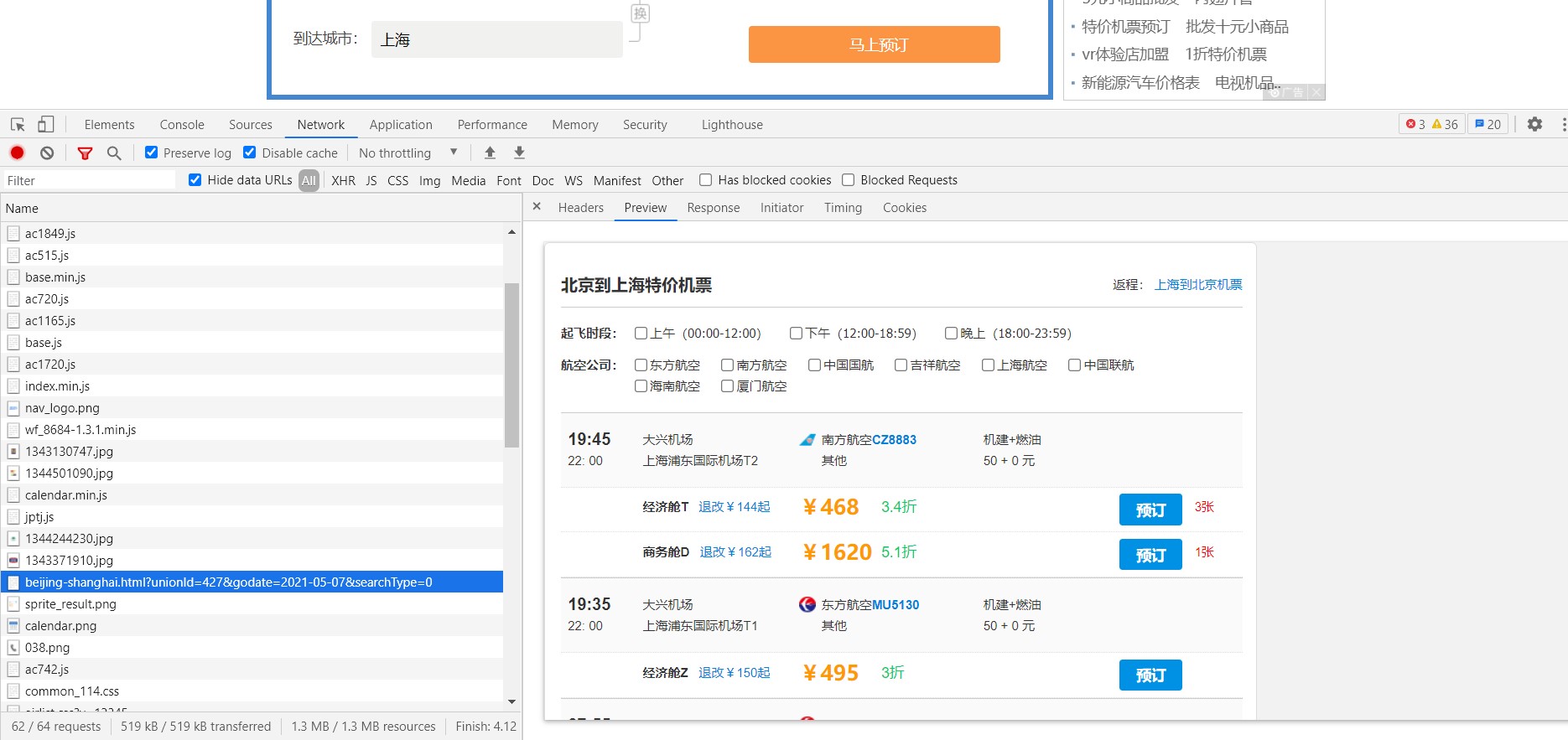

After capturing the network traffic, we find that the data we need lives in a separate page embedded inside the main page.

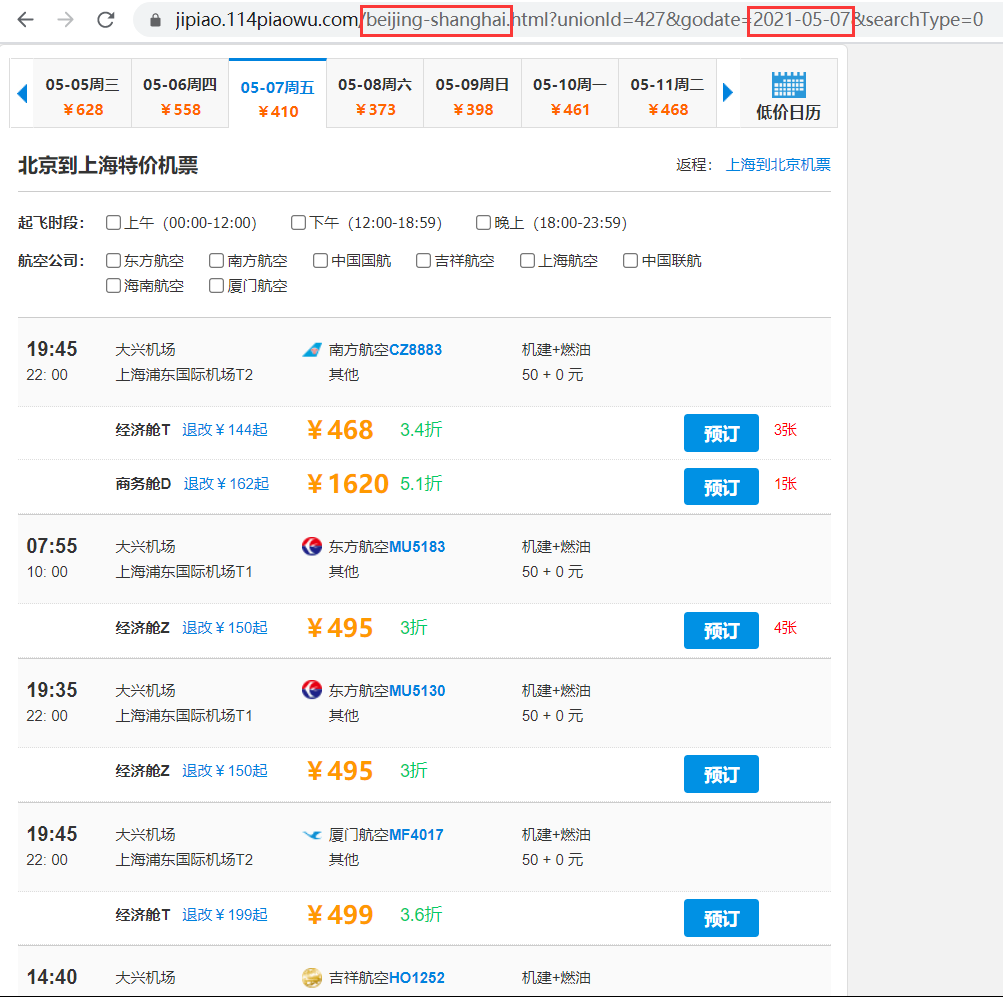

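Testing this page's URL shows that the path right after the domain consists of the pinyin of the departure city and of the destination, and the godate query parameter that follows is the departure date.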
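To make the URL pattern concrete, here is a minimal sketch that assembles the address from the three inputs. It assumes the city names are passed as lowercase pinyin without spaces (for example beijing, shanghai) and the date as YYYY-MM-DD; unionId=427 and searchType=0 are simply copied from the captured request, and build_search_url is a hypothetical helper name.

```python
from urllib.parse import urlencode

BASE = 'https://jipiao.114piaowu.com'


def build_search_url(dep_pinyin: str, arr_pinyin: str, godate: str) -> str:
    """Hypothetical helper: build /{departure}-{destination}.html plus the
    query string. unionId and searchType are copied as-is from the captured
    request; their meaning is not known."""
    query = urlencode([
        ('unionId', '427'),
        ('godate', godate),      # departure date, e.g. 2021-05-10
        ('searchType', '0'),
    ])
    return f'{BASE}/{dep_pinyin}-{arr_pinyin}.html?{query}'


print(build_search_url('beijing', 'shanghai', '2021-05-10'))
```

This should produce the same address that requests builds from the URL and params in the chaxun function, since both encode the parameters in the same order.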


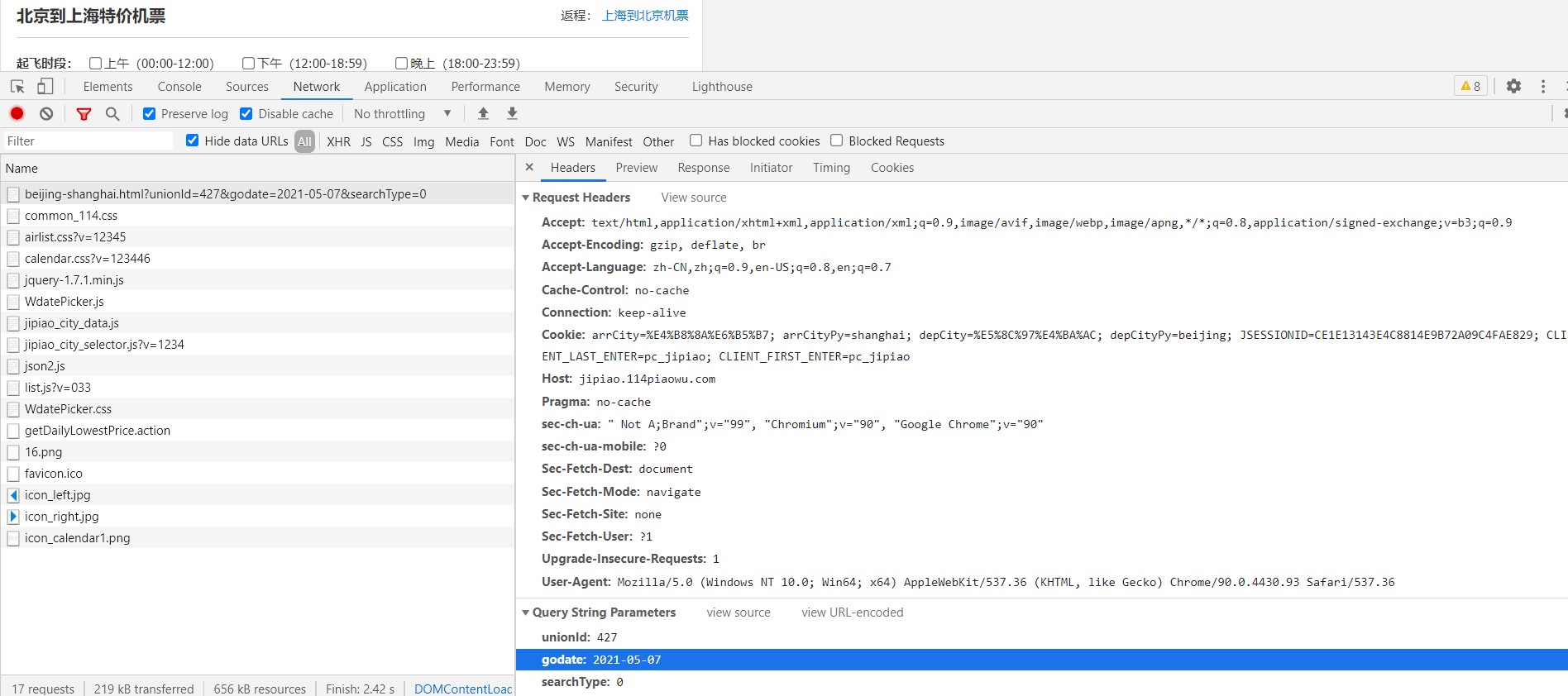

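Next, let's look at the request parameters for this URL. There is nothing special about the request headers; include as many of them as you can. In the params, the one to note is godate, the departure date. In the cookies, arrCityPy holds the pinyin of the departure city and depCityPy the pinyin of the destination. (Judging by the names, arr/dep would usually mean arrival/departure, so the two may be swapped; since the route is already part of the URL path, this probably makes little difference.)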
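Since godate is just a date string, it pays to validate it before sending the request. The script's input loop only checks the shape \d{4}-\d{2}-\d{2}, which also lets impossible dates such as 2021-13-45 through. A minimal sketch of a stricter check, assuming the site wants the YYYY-MM-DD form that loop enforces (normalize_godate is a hypothetical helper):

```python
from datetime import date, datetime


def normalize_godate(value) -> str:
    """Hypothetical helper: return value as 'YYYY-MM-DD'.

    Accepts a datetime.date (or datetime) or a string; raises ValueError
    for strings that are not real calendar dates."""
    if isinstance(value, date):
        return value.strftime('%Y-%m-%d')
    # strptime rejects impossible dates and also accepts '2021-5-10',
    # which is then re-emitted zero-padded.
    return datetime.strptime(value.strip(), '%Y-%m-%d').strftime('%Y-%m-%d')


print(normalize_godate('2021-5-10'))
print(normalize_godate(date(2021, 5, 10)))
```

Using strptime instead of a regex moves the calendar rules (month lengths, leap years) into the standard library rather than the pattern.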


2. Writing the Code

First, the function that fetches the page.

import requests


def chaxun(start, end, date):
    # Cookies carry the city pinyin, mirroring the browser request
    cookies = {
        'arrCityPy': start,
        'depCityPy': end,
    }
    headers = {
        'Connection': 'keep-alive',
        'Pragma': 'no-cache',
        'Cache-Control': 'no-cache',
        # The captured 'sec-ch-ua' value ('^\\^') was mangled by Windows cmd escaping, so it is left out
        'sec-ch-ua-mobile': '?0',
        'Upgrade-Insecure-Requests': '1',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
        'Sec-Fetch-Site': 'none',
        'Sec-Fetch-Mode': 'navigate',
        'Sec-Fetch-User': '?1',
        'Sec-Fetch-Dest': 'document',
        'Accept-Language': 'zh-CN,zh;q=0.9,en-US;q=0.8,en;q=0.7',
    }
    # godate is the departure date; unionId and searchType are copied from the captured request
    params = (
        ('unionId', '427'),
        ('godate', date),
        ('searchType', '0'),
    )
    # The route (departure-destination pinyin) is part of the URL path
    response = requests.get(f'https://jipiao.114piaowu.com/{start}-{end}.html',
                            headers=headers, params=params, cookies=cookies, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    # print(response.text)
    return response.content.decode('utf-8')

Then the parsing function.

from lxml import etree


def jianxi(html):
    xp = etree.HTML(html)
    hangban_data = []
    # Every flight is a "mainDiv66" block inside the "jp_list" container
    hangban_list = xp.xpath('//*[@class="jp_list"]//*[@class="mainDiv66"]')
    for i in range(1, len(hangban_list) + 1):
        # ''.join(s.split()) removes all whitespace from the scraped text
        start_time = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[1]/b/text()')[0]  # departure time
        start_time = ''.join(start_time.split())
        end_time = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[1]/p/text()')[0]  # arrival time
        end_time = ''.join(end_time.split())
        start_address = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[2]/p[1]/text()')[0]  # departure location
        start_address = ''.join(start_address.split())
        end_address = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[2]/p[2]/text()')[0]  # arrival location
        end_address = ''.join(end_address.split())
        hangban = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[3]/p[1]//text()')  # flight (two text nodes)
        hangban = ''.join([''.join(hangban[0].split()), ''.join(hangban[1].split())])
        # jijian_ranyou = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[4]/p[2]/text()')[0]  # airport fee + fuel surcharge
        # jijian_ranyou = ''.join(jijian_ranyou.split())
        jipiao = []  # tickets: one entry per cabin class offered
        jipiao_list = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"]')
        for j in range(1, len(jipiao_list) + 1):
            cangwei = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[2]/b/text()')[0]  # cabin class
            cangwei = ''.join(cangwei.split())
            price = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[3]/b//text()')  # price (two text nodes)
            price = ''.join([''.join(price[0].split()), ''.join(price[1].split())])
            zhekou = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[3]/em/text()')[0]  # discount
            zhekou = ''.join(zhekou.split())
            jipiao.append({'cabin': cangwei, 'price': price, 'discount': zhekou})
        # hangban_data.append({'departure_time': start_time, 'arrival_time': end_time, 'departure_location': start_address, 'arrival_location': end_address, 'flight': hangban, 'fee_and_fuel': jijian_ranyou, 'tickets': jipiao})
        hangban_data.append({'departure_time': start_time, 'arrival_time': end_time, 'departure_location': start_address,
                             'arrival_location': end_address, 'flight': hangban, 'tickets': jipiao})
    return hangban_data

3. Complete Code

# coding: utf-8
import re
import time

import requests
from lxml import etree


def chaxun(start, end, date):
    # Cookies carry the city pinyin, mirroring the browser request
    cookies = {
        'arrCityPy': start,
        'depCityPy': end,
    }
    headers = {
        'Connection': 'keep-alive',
        'Pragma': 'no-cache',
        'Cache-Control': 'no-cache',
        # The captured 'sec-ch-ua' value ('^\\^') was mangled by Windows cmd escaping, so it is left out
        'sec-ch-ua-mobile': '?0',
        'Upgrade-Insecure-Requests': '1',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
        'Sec-Fetch-Site': 'none',
        'Sec-Fetch-Mode': 'navigate',
        'Sec-Fetch-User': '?1',
        'Sec-Fetch-Dest': 'document',
        'Accept-Language': 'zh-CN,zh;q=0.9,en-US;q=0.8,en;q=0.7',
    }
    # godate is the departure date; unionId and searchType are copied from the captured request
    params = (
        ('unionId', '427'),
        ('godate', date),
        ('searchType', '0'),
    )
    response = requests.get(f'https://jipiao.114piaowu.com/{start}-{end}.html',
                            headers=headers, params=params, cookies=cookies, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    # print(response.text)
    return response.content.decode('utf-8')


def jianxi(html):
    xp = etree.HTML(html)
    hangban_data = []
    # Every flight is a "mainDiv66" block inside the "jp_list" container
    hangban_list = xp.xpath('//*[@class="jp_list"]//*[@class="mainDiv66"]')
    for i in range(1, len(hangban_list) + 1):
        # ''.join(s.split()) removes all whitespace from the scraped text
        start_time = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[1]/b/text()')[0]  # departure time
        start_time = ''.join(start_time.split())
        end_time = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[1]/p/text()')[0]  # arrival time
        end_time = ''.join(end_time.split())
        start_address = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[2]/p[1]/text()')[0]  # departure location
        start_address = ''.join(start_address.split())
        end_address = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[2]/p[2]/text()')[0]  # arrival location
        end_address = ''.join(end_address.split())
        hangban = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[3]/p[1]//text()')  # flight (two text nodes)
        hangban = ''.join([''.join(hangban[0].split()), ''.join(hangban[1].split())])
        # jijian_ranyou = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="aboutfj"]/li[4]/p[2]/text()')[0]  # airport fee + fuel surcharge
        # jijian_ranyou = ''.join(jijian_ranyou.split())
        jipiao = []  # tickets: one entry per cabin class offered
        jipiao_list = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"]')
        for j in range(1, len(jipiao_list) + 1):
            cangwei = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[2]/b/text()')[0]  # cabin class
            cangwei = ''.join(cangwei.split())
            price = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[3]/b//text()')  # price (two text nodes)
            price = ''.join([''.join(price[0].split()), ''.join(price[1].split())])
            zhekou = xp.xpath(f'//*[@class="jp_list"]/div[{i}]//*[@class="yd_list"][{j}]/li[3]/em/text()')[0]  # discount
            zhekou = ''.join(zhekou.split())
            jipiao.append({'cabin': cangwei, 'price': price, 'discount': zhekou})
        # hangban_data.append({'departure_time': start_time, 'arrival_time': end_time, 'departure_location': start_address, 'arrival_location': end_address, 'flight': hangban, 'fee_and_fuel': jijian_ranyou, 'tickets': jipiao})
        hangban_data.append({'departure_time': start_time, 'arrival_time': end_time, 'departure_location': start_address,
                             'arrival_location': end_address, 'flight': hangban, 'tickets': jipiao})
    return hangban_data


if __name__ == '__main__':
    start_time = time.time()
    start1 = input('Departure city (pinyin): ')
    end1 = input('Destination city (pinyin): ')
    date = input('Departure date (YYYY-MM-DD): ')
    # Keep asking until the input has the YYYY-MM-DD shape (raw string avoids invalid-escape warnings)
    while not re.fullmatch(r'\d{4}-\d{2}-\d{2}', date):
        date = input('Departure date (YYYY-MM-DD): ')
    print('Searching...')
    html = chaxun(start1, end1, date)
    datas = jianxi(html)
    print(len(datas))  # number of flights found
    if len(datas) != 0:
        for data in datas:
            print(data)
    else:
        print('No matching flights found')
    end_time = time.time()
    print(f'Ran for {end_time - start_time} seconds')

Summary

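The code this time isn't difficult. The page we fetch behaves like a static page and has hardly any anti-scraping measures, so getting the data is much easier than on Ctrip. Master the three steps of analyzing the page, fetching it, and parsing it, and you can easily extract the data you want from most ordinary websites.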
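As a small illustration of the parsing step, the sketch below queries relative to each flight node instead of rebuilding absolute div[{i}] paths the way jianxi does, so it does not depend on the flights being the only div children of jp_list, and a missing field yields an empty string instead of an IndexError. It uses the standard library's xml.etree.ElementTree on a hypothetical, well-formed snippet whose class names (jp_list, mainDiv66, aboutfj) mimic the ones above; the structure and values are made up, and the real page, which need not be well-formed XML, would still call for lxml's HTML parser. parse_flights and squash are hypothetical names.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; class names mimic the real page,
# but the structure and values are invented for this example.
SAMPLE = """<div class="jp_list">
  <div class="mainDiv66">
    <ul class="aboutfj">
      <li><b> 07:00 </b><p> 09:15 </p></li>
      <li><p> AirportA </p><p> AirportB </p></li>
      <li><p>XX <span>1234</span></p></li>
    </ul>
  </div>
  <div class="mainDiv66">
    <ul class="aboutfj">
      <li><b>08:30</b><p>10:45</p></li>
      <li><p>AirportC</p><p>AirportD</p></li>
    </ul>
  </div>
</div>"""


def squash(node):
    """Join all text under node and drop every whitespace character,
    like ''.join(s.split()) in jianxi; a missing node gives ''."""
    if node is None:
        return ''
    return ''.join(''.join(node.itertext()).split())


def parse_flights(markup):
    root = ET.fromstring(markup.strip())
    flights = []
    # Relative queries: each path is evaluated inside one flight block
    for item in root.iterfind(".//*[@class='mainDiv66']"):
        info = item.find(".//*[@class='aboutfj']")
        if info is None:
            continue
        flights.append({
            'departure_time': squash(info.find('li[1]/b')),
            'arrival_time': squash(info.find('li[1]/p')),
            'departure_location': squash(info.find('li[2]/p[1]')),
            'arrival_location': squash(info.find('li[2]/p[2]')),
            'flight': squash(info.find('li[3]/p[1]')),
        })
    return flights


for flight in parse_flights(SAMPLE):
    print(flight)
```

With lxml the same pattern works by calling node.xpath('.//...') on each element returned by the outer query.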